1-DAV-202 Data Management 2024/25


Latest revision as of 08:39, 21 March 2024

Sometimes you may be interested in processing data which is available in the form of a website consisting of multiple webpages (for example an e-shop with one page per item or a discussion forum with pages of individual users and individual discussion topics).

In this lecture, we will extract information from such a website using Python and existing Python libraries. We will store the results in an SQLite database. These results will be analyzed further in the following lectures.


Scraping webpages

In Python, the simplest tool for downloading webpages is the requests package:

import requests

r = requests.get("http://en.wikipedia.org")

print(r.text[:10])
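Downloading a whole website means repeating this request for many pages. The sketch below shows one way to do it. It assumes a hypothetical listing page whose pages are addressed by a page query parameter, so check the real URL scheme of your site. The helper names page_urls and download_all are made up for illustration. The code reuses one requests.Session, sets a timeout, and pauses between requests so that the server is not overloaded.

```python
import time

import requests


def page_urls(base_url, n_pages):
    """Build URLs of pages 1..n_pages, assuming a hypothetical ?page=N scheme."""
    return [
        requests.Request("GET", base_url, params={"page": i}).prepare().url
        for i in range(1, n_pages + 1)
    ]


def download_all(urls, delay=1.0):
    """Download each URL in turn and return a dict {url: html_text}."""
    pages = {}
    with requests.Session() as session:  # reuses the connection between requests
        for url in urls:
            r = session.get(url, timeout=10)  # give up after 10 seconds
            r.raise_for_status()  # raise an exception for 4xx/5xx responses
            pages[url] = r.text
            time.sleep(delay)  # be polite to the server
    return pages


print(page_urls("https://example.com/forum", 3))
```

Calling download_all(page_urls(...)) returns the HTML of each page, which can then be parsed as described in the next section.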

Parsing webpages

When you download a page from a website, you get it in HTML format and need to extract the useful information from it. We will use the beautifulsoup4 library for parsing HTML.

Parsing a webpage:

import requests

from bs4 import BeautifulSoup

text = requests.get("http://en.wikipedia.org").text

parsed = BeautifulSoup(text, 'html.parser')

The variable parsed now contains a tree of HTML elements. Since the task in our homework is to obtain user comments, we need to find the elements that contain user comments in this tree.
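The following sketch extracts the author and text of each comment from a made-up forum fragment. The HTML and the class names comment, author and body are hypothetical. On a real site you first have to find the right elements, as described in the following sections.

```python
from bs4 import BeautifulSoup

# Made-up fragment of a forum page; the class names "comment", "author"
# and "body" are hypothetical and differ from site to site
html = """
<div class="thread">
  <div class="comment">
    <span class="author">alice</span>
    <p class="body">First!</p>
  </div>
  <div class="comment">
    <span class="author">bob</span>
    <p class="body">I <b>agree</b>.</p>
  </div>
</div>
"""
parsed = BeautifulSoup(html, "html.parser")

comments = []
for div in parsed.select("div.comment"):
    author = div.select_one(".author").get_text().strip()
    # get_text() also collects the text of nested tags such as <b>
    body = div.select_one(".body").get_text().strip()
    comments.append((author, body))

print(comments)
```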

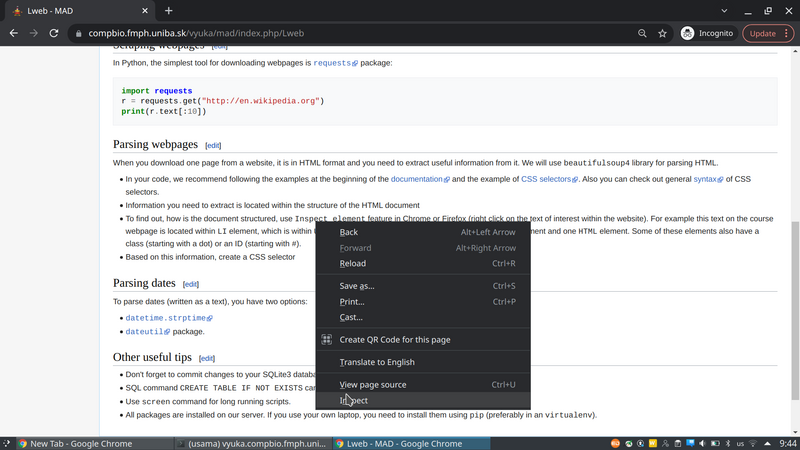

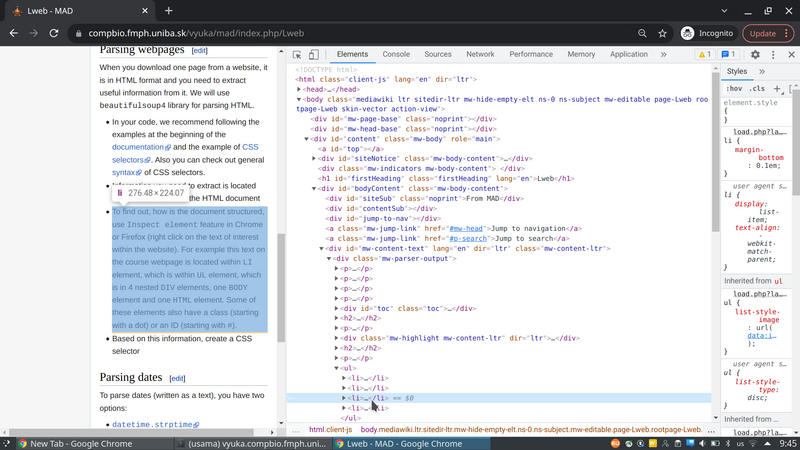

Exploring structure of HTML document

- Information you need to extract is located within the structure of the HTML document.

- To find out, how is the document structured, use Inspect element feature in Chrome or Firefox (right click on the text of interest within the website). For example this text on the course webpage is located within LI element, which is within UL element, which is in 4 nested DIV elements, one BODY element and one HTML element. Some of these elements also have a class (starting with a dot) or an ID (starting with #).
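You can also reproduce the nesting that the inspector shows in Python, which helps when turning it into a selector. The sketch below uses a small made-up page and a hypothetical helper describe that formats each tag as name#id.class. The parents attribute of a BeautifulSoup tag yields the enclosing elements from the innermost outwards.

```python
from bs4 import BeautifulSoup

# A tiny made-up page with nesting similar to a real website
html = """
<html><body>
  <div id="content"><div class="inner">
    <ul><li>Information you need to extract</li></ul>
  </div></div>
</body></html>
"""
parsed = BeautifulSoup(html, "html.parser")


def describe(tag):
    """Format a tag the way the inspector does: name, #id and .classes."""
    text = tag.name
    if tag.get("id"):
        text += "#" + tag["id"]
    for cls in tag.get("class", []):  # "class" is a list of strings
        text += "." + cls
    return text


li = parsed.select_one("li")
# .parents goes outwards from the element; skip the top-level
# BeautifulSoup object, whose name is "[document]"
chain = [describe(li)] + [describe(p) for p in li.parents if p.name != "[document]"]
selector = " > ".join(reversed(chain))
print(selector)
print(parsed.select(selector))
```

The resulting string is itself a valid CSS selector. In practice, a shorter selector (for example div.inner li) is usually more robust to small changes of the page.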

Extracting elements of interest from HTML tree

- Once we know which HTML elements we need, we can select them from the HTML tree stored in the parsed variable using the select method.

- Here we show several examples of finding elements of interest and extracting data from them.

To select all nodes with the tag <a>, you can use:

>>> links = parsed.select('a')

>>> links[1]

<a class="mw-jump-link" href="#mw-head">Jump to navigation</a>
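To follow links to other pages of the website, you usually need attribute values rather than the text. In the minimal sketch below, built on a made-up HTML snippet modeled on the link above, an attribute is read like a dictionary entry. The standard-library function urllib.parse.urljoin then turns relative links into absolute URLs. The base URL is assumed to be the address of the downloaded page.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

html = ('<p><a class="mw-jump-link" href="#mw-head">Jump to navigation</a> '
        '<a href="/wiki/Python">Python</a> <a name="no-href">anchor</a></p>')
parsed = BeautifulSoup(html, "html.parser")
base_url = "https://en.wikipedia.org/"  # address of the page the HTML came from

links = parsed.select("a[href]")  # only <a> tags that have an href attribute
urls = [urljoin(base_url, a["href"]) for a in links]
classes = [a.get("class", []) for a in links]  # .get() avoids KeyError
print(urls)
print(classes)
```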

The inner text of an element is obtained as follows:

>>> links[1].string

'Jump to navigation'

To select an <li> element and traverse its children, you can use the following code. Also note the difference between the attributes string and text: string is None when an element has more than one child, while text contains the text of all descendants:

>>> li = parsed.select('li')

>>> li[10]

<li><a href="/wiki/Fearless_(Taylor_Swift_album)" title="Fearless (Taylor Swift album)"><i>Fearless</i> (Taylor Swift album)</a></li>

>>> for ch in li[10].children:

...     print(ch.name)

...

a

>>> ch.string

>>> ch.text

'Fearless (Taylor Swift album)'
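The same behaviour can be checked on a standalone copy of the element above. The sketch also shows get_text, which accepts a separator and a strip flag. This is handy when the pieces of text should not be glued together.

```python
from bs4 import BeautifulSoup

html = ('<li><a href="/wiki/Fearless_(Taylor_Swift_album)" '
        'title="Fearless (Taylor Swift album)">'
        '<i>Fearless</i> (Taylor Swift album)</a></li>')
a = BeautifulSoup(html, "html.parser").select_one("a")

print(a.string)    # None: <a> has two children, <i> and a text node
print(a.i.string)  # <i> has a single text child, so .string works
print(a.text)      # text of all descendants concatenated
print(a.get_text("|", strip=True))  # pieces joined by "|", whitespace stripped
```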

Here are examples of more complex selections of HTML elements:

parsed.select('li i') # <i> anywhere inside an <li> (not necessarily a direct child), returns the inner <i> tags

parsed.select('li > i') # <i> that is a direct child of an <li>, returns the inner <i> tags

parsed.select('li > i')[0].parent # gets the parent tag

parsed.select('.interlanguage-link-target') # anything with class "interlanguage-link-target"

parsed.select('a.interlanguage-link-target') # <a> tags with class "interlanguage-link-target"

parsed.select('li .interlanguage-link-target') # anything with class "interlanguage-link-target" located inside an <li> tag

parsed.select('#top') # anything with id="top"
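A few more selectors are often useful when scraping. They are shown below on a made-up list so that the results can be checked:

- attribute selectors such as [href^="..."], which match when the attribute value starts with a given prefix;
- select_one, which returns only the first match (or None);
- the :nth-of-type pseudo-class.

These are standard CSS selectors accepted by select in current versions of BeautifulSoup.

```python
from bs4 import BeautifulSoup

html = """
<ul id="top">
  <li><a href="/wiki/Python" class="internal">Python</a></li>
  <li><a href="https://example.org/" class="external">Example</a></li>
  <li><i>no link here</i></li>
</ul>
"""
parsed = BeautifulSoup(html, "html.parser")

# <a> tags whose href starts with "/wiki/" (links inside the same wiki)
internal = parsed.select('a[href^="/wiki/"]')
# first <li> that is a direct child of the element with id="top"
first_li = parsed.select_one("#top > li")
# <a> tags inside the second <li> of its parent
second = parsed.select("li:nth-of-type(2) a")
# select_one returns None when nothing matches
missing = parsed.select_one("table")

print([a.string for a in internal], first_li.a.string, [a.string for a in second], missing)
```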

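- In your code, we recommend following the examples at the beginning of the BeautifulSoup documentation (http://www.crummy.com/software/BeautifulSoup/bs4/doc/) and its section on CSS selectors (http://www.crummy.com/software/BeautifulSoup/bs4/doc/#css-selectors). You can also check out the general syntax of CSS selectors (https://www.w3schools.com/cssref/css_selectors.asp).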

Parsing dates

To parse dates (written as text), you have two options:

- The standard datetime module, where strptime needs an explicit format string:

>>> import datetime

>>> datetime_str = '9.10.18 13:55:26'

>>> datetime.datetime.strptime(datetime_str, '%d.%m.%y %H:%M:%S')

datetime.datetime(2018, 10, 9, 13, 55, 26)
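strptime requires the format to match the text exactly; otherwise it raises ValueError. The minimal sketch below uses a hypothetical helper, parse_or_none, and a date written with the month name. Storing the result as an ISO string (isoformat) is one convenient option for SQLite, because such strings sort chronologically.

```python
import datetime


def parse_or_none(text, fmt):
    """Parse text with an explicit format; return None if it does not match."""
    try:
        return datetime.datetime.strptime(text, fmt)
    except ValueError:  # raised when text does not match fmt
        return None


# %B is the full month name (English under the default C locale)
posted = parse_or_none("21 March 2024, 08:39", "%d %B %Y, %H:%M")
print(posted.isoformat(sep=" "))  # text form suitable for an SQLite column
print(parse_or_none("9.10.18", "%Y-%m-%d"))  # wrong format, prints None
```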

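- The dateutil package (https://dateutil.readthedocs.org/en/latest/parser.html), which guesses the format. Beware that by default it prefers the "month.day.year" order for ambiguous dates; this can be changed with the dayfirst flag.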

>>> import dateutil.parser

>>> dateutil.parser.parse('2012-01-02 15:20:30')

datetime.datetime(2012, 1, 2, 15, 20, 30)

>>> dateutil.parser.parse('02.01.2012 15:20:30')

datetime.datetime(2012, 2, 1, 15, 20, 30)

>>> dateutil.parser.parse('02.01.2012 15:20:30', dayfirst=True)

datetime.datetime(2012, 1, 2, 15, 20, 30)
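When scraped dates may be malformed, it can help to wrap the parser so that one bad value does not stop the whole script. The minimal sketch below uses a hypothetical helper, parse_date. dateutil signals unparseable input with ValueError (recent versions raise dateutil.parser.ParserError, a subclass of ValueError) and values that are too large with OverflowError.

```python
import dateutil.parser


def parse_date(text):
    """Parse a day-first date; return None instead of raising on bad input."""
    try:
        return dateutil.parser.parse(text, dayfirst=True)
    except (ValueError, OverflowError):  # ParserError is a subclass of ValueError
        return None


dates = [parse_date(s) for s in ["02.01.2012 15:20:30", "9.10.18 13:55:26", "hello"]]
print(dates)
```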

Other useful tips

- Don't forget to commit changes to your SQLite3 database (call db.commit()).

- The SQL command CREATE TABLE IF NOT EXISTS can be useful at the start of your script.

- Use the screen command for long-running scripts. It keeps your script running even if you close the SSH connection (or it drops due to bad Wi-Fi).

  - To detach from screen, press Ctrl+A and then D.

  - To reattach, type screen -r.

  - More details are in a separate tutorial on screen.

- All packages are installed on our server. If you use your own laptop, you need to install them using pip (preferably in a virtualenv).
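The first two tips can be combined as in the following minimal sketch, which uses Python's built-in sqlite3 module. The table name comments and its columns are hypothetical. The ? placeholders let sqlite3 insert scraped text safely, without manual quoting.

```python
import sqlite3

# ":memory:" keeps the database in RAM for this demo; pass a file name
# (for example "scraped.db", a hypothetical name) to keep the data
db = sqlite3.connect(":memory:")

# IF NOT EXISTS makes the script safe to re-run on an existing database
db.execute("""CREATE TABLE IF NOT EXISTS comments (
                  author TEXT, posted TEXT, body TEXT)""")

# Hypothetical scraped rows; "?" placeholders avoid manual quoting
rows = [("alice", "2024-03-21 08:39:00", "First!"),
        ("bob", "2024-03-21 09:10:00", "I agree.")]
db.executemany("INSERT INTO comments VALUES (?, ?, ?)", rows)
db.commit()  # without commit, the inserted rows may be lost when the script ends

count = db.execute("SELECT COUNT(*) FROM comments").fetchone()[0]
print(count)
```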