1-DAV-202 Data Management 2024/25

Difference between revisions of "Integrácia dátových zdrojov 2016/17"

(Created page with "Website for 2017/17 * Kontakt * Úvod * Pravidlá * 2017-02-21 (TV) Perl, part 1 (basics, input processing): Lecture 1, Homework 1 * 2017-02-28...") |


| Line 1: | Line 1: | ||

Website for 2016/17 | Website for 2016/17 | ||

| − | * [[Kontakt]] | + | * [[#Kontakt]] |

| − | * [[Úvod]] | + | * [[#Úvod]] |

| − | * [[Pravidlá]] | + | * [[#Pravidlá]] |

| − | * 2017-02-21 (TV) Perl, part 1 (basics, input processing): [[L01|Lecture 1]], [[HW01|Homework 1]] | + | * 2017-02-21 (TV) Perl, part 1 (basics, input processing): [[#L01|Lecture 1]], [[#HW01|Homework 1]] |

| − | * 2017-02-28 (TV) Perl, part 2 (external commands, files, subroutines): [[L02|Lecture 2]], [[HW02|Homework 2]] | + | * 2017-02-28 (TV) Perl, part 2 (external commands, files, subroutines): [[#L02|Lecture 2]], [[#HW02|Homework 2]] |

| − | * 2017-03-07 (BB) Command-line tools, Perl one-liners: [[L03|Lecture 3]], [[HW03|Homework 3]] | + | * 2017-03-07 (BB) Command-line tools, Perl one-liners: [[#L03|Lecture 3]], [[#HW03|Homework 3]] |

| − | * 2017-03-14 (BB) Job scheduling and make [[L04|Lecture 4]], [[HW04|Homework 4]] | + | * 2017-03-14 (BB) Job scheduling and make [[#L04|Lecture 4]], [[#HW04|Homework 4]] |

| − | * 2017-03-21 (BB) R, part 1 [[L05|Lecture 5]], [[HW05|Homework 5]] | + | * 2017-03-21 (BB) R, part 1 [[#L05|Lecture 5]], [[#HW05|Homework 5]] |

| − | * 2017-03-28 (BB) R, part 2 [[L06|Lecture 6]], [[HW06|Homework 6]] | + | * 2017-03-28 (BB) R, part 2 [[#L06|Lecture 6]], [[#HW06|Homework 6]] |

| − | * 2017-04-04 (VB) Python, web crawling, HTML parsing, sqlite3 [[L07|Lecture 7]], [[HW07|Homework 7]] | + | * 2017-04-04 (VB) Python, web crawling, HTML parsing, sqlite3 [[#L07|Lecture 7]], [[#HW07|Homework 7]] |

| − | * 2017-04-11 Python and SQL for beginners (work on | + | * 2017-04-11 Python and SQL for beginners (work on HW07), consultations on project topics |

* 2017-04-18 no lecture (Easter) | * 2017-04-18 no lecture (Easter) | ||

| − | * 2017-04-25 (VB) Text data processing, flask [[L08|Lecture 8]], [[HW08|Homework 8]] | + | * 2017-04-25 (VB) Text data processing, flask [[#L08|Lecture 8]], [[#HW08|Homework 8]] |

| − | * 2017-05-02 (VB) Data visualization in JavaScript [[L09|Lecture 9]], [[HW09|Homework 9]] | + | * 2017-05-02 (VB) Data visualization in JavaScript [[#L09|Lecture 9]], [[#HW09|Homework 9]] |

| − | * 2017-05-09 (JS) Hadoop [[L10|Lecture 10]], [[HW10|Homework 10]] | + | * 2017-05-09 (JS) Hadoop [[#L10|Lecture 10]], [[#HW10|Homework 10]] |

| − | * 2017-05-16 (BB) More databases, scripting language of your choice [[L11|Lecture 11]], [[HW11|Homework 11]] | + | * 2017-05-16 (BB) More databases, scripting language of your choice [[#L11|Lecture 11]], [[#HW11|Homework 11]] |

| − | * 2017-05-23 | + | * 2017-05-23 work on projects |

| + | |||

| + | =Kontakt= | ||

| + | '''Instructors''' | ||

| + | |||

| + | * [http://compbio.fmph.uniba.sk/~bbrejova/ doc. Mgr. Broňa Brejová, PhD.], room M-163 <!-- , [[Image:e-bb.png]] --> | ||

| + | * [http://compbio.fmph.uniba.sk/~tvinar/ Mgr. Tomáš Vinař, PhD.], room M-163 <!-- , [[Image:e-tv.png]] --> | ||

| + | * [http://dai.fmph.uniba.sk/w/Vladimir_Boza/sk Mgr. Vladimír Boža], room M-25 <!-- , [[Image:e-vb.png]] --> | ||

| + | * [http://dai.fmph.uniba.sk/~siska/ RNDr. Jozef Šiška, PhD.], room I-7 | ||

| + | * Consultations by appointment, arranged by email | ||

| + | |||

| + | '''Schedule''' | ||

| + | * Tuesday 14:00-16:20, M-217 | ||

| + | =Úvod= | ||

| + | ==Target audience== | ||

| + | This course is intended for second-year students of the bachelor's study program Bioinformatics and for students of the bachelor's and master's study programs in Computer Science, especially if they plan to take the state exam specialization Bioinformatics and Machine Learning in their master's studies. Students of other specializations and study programs are also welcome, provided they meet the (informal) prerequisites. | ||

| + | |||

| + | We assume that students in this course can already program in some programming language and are not afraid to learn new languages as needed. We also assume basic knowledge of working in Linux, including running commands on the command line (you should know at least the basic commands for working with files and directories, such as cd, mkdir, cp, mv, rm, chmod). Although most technologies covered in this course can be used to process data from many areas, we will often illustrate them on examples from bioinformatics. We will try to explain the necessary terms, but it would help if you were familiar with basic concepts of molecular biology, such as DNA, RNA, protein, gene, genome, evolution and phylogenetic tree. We recommend that students of the Bioinformatics and Machine Learning specialization first take Methods in Bioinformatics (Metódy v bioinformatike) and only then this course. | ||

| + | |||

| + | If you want to learn the basics of using the command line, try for example this tutorial: http://korflab.ucdavis.edu/bootcamp.html | ||

| + | |||

| + | ==Course goal== | ||

| + | |||

| + | During your studies you will learn many interesting algorithms, models and methods that can be used to process data in bioinformatics or other areas. However, if you want to apply these methods to real data, during your studies or later at work, you will find that most of the effort usually goes into obtaining the data, preprocessing them into a suitable form, testing and comparing different methods or their settings, and producing the final results as clear tables and plots. These activities often need to be repeated many times for different inputs, different settings and so on. Particularly in bioinformatics, you can find a job where your main task is data processing using existing tools, possibly supplemented by small programs of your own. In this course we will show some programming languages, techniques and technologies suitable for these activities. Many of them are applicable to data from various areas, but we will also cover tools specific to bioinformatics. | ||

| + | |||

| + | ==Basic principles== | ||

| + | |||

| + | We recommend the following article with good advice on computational experiments: | ||

| + | * Noble WS. [http://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1000424 A quick guide to organizing computational biology projects.] PLoS Comput Biol. 2009 Jul 31;5(7):e1000424. | ||

| + | |||

| + | Some important principles: | ||

| + | * A quote from Noble 2009: "Everything you do, you will probably have to do over again." | ||

| + | * Document all steps of the experiment well (what you did, why you did it, what the result was) | ||

| + | ** Even you yourself will not remember these details in a few months | ||

| + | * Try to keep a logical structure of directories and files | ||

| + | ** However, if you have many experiments, it may be enough to label them by date rather than keep inventing new long names | ||

| + | * Try to avoid manual modifications of intermediate results, which make it hard to simply rerun the experiment | ||

| + | * Try to detect errors in the data | ||

| + | ** Scripts should terminate with an error message when something does not go as expected | ||

| + | ** In scripts, check as much as possible that the input data match your expectations (correct format, reasonable range of values, etc.) | ||

| + | ** If your script calls another program, check its exit code | ||

| + | ** Also check intermediate results of the computation as often as possible (by manual inspection, by computing various statistics, etc.) to discover potential errors in the data or in your code | ||
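The last few principles (fail loudly, validate input, check exit codes) can be sketched in Perl, the first language of this course. This is only a minimal illustration: the helper names <tt>run_checked</tt> and <tt>check_count</tt> are hypothetical and not part of the course materials.

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Hypothetical helper: run an external command, die if it cannot
# be started or if it ends with a non-zero status.
sub run_checked {
    my @cmd = @_;
    my $status = system(@cmd);
    if ($status == -1) {
        die "Cannot start '@cmd': $!";
    } elsif ($status != 0) {
        # the exit code is stored in the upper 8 bits of the status
        die sprintf("Command '%s' failed with exit code %d", "@cmd", $status >> 8);
    }
    return 1;
}

# Hypothetical helper: check that a value is a non-negative integer.
sub check_count {
    my ($value) = @_;
    die "Bad count" unless defined $value && $value =~ /^\d+$/;
    return $value;
}

# $^X is the path of the running Perl interpreter, used here
# as an external program that is certainly installed
run_checked($^X, "-e", "exit 0");
print "count ok: ", check_count("42"), "\n";
```

Calling <tt>run_checked</tt> on a command that exits with a non-zero code stops the script with an error message instead of silently continuing with missing results.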

| + | =Pravidlá= | ||

| + | ==Grading== | ||

| + | |||

| + | * Homework assignments: 55% | ||

| + | * Project proposal: 5% | ||

| + | * Project: 40% | ||

| + | |||

| + | Grading scale: | ||

| + | * A: 90 and more, B: 80...89, C: 70...79, D: 60...69, E: 50...59, FX: less than 50% | ||

| + | |||

| + | ==Course format== | ||

| + | * Three class hours every week: roughly the first one is a lecture and the remaining two are exercises. During the exercises you work on tasks on your own, and you finish them at home as homework. | ||

| + | * You will submit the project during the exam period. After the projects are submitted, there will also be a discussion about the project with the instructors, which may influence your project points. | ||

| + | * You will have an account on a Linux server dedicated to this course. Use this account only for the purposes of this course and try not to overload the server with your activity, so that it can serve all students. Any attempt to intentionally disrupt the operation of the server will be considered a serious violation of the course rules. | ||

| + | |||

| + | ==Homework== | ||

| + | * The deadline for the homework related to the current lecture is always 9:00 on the day of the next lecture (i.e. usually a little less than a week after it is assigned). | ||

| + | * We recommend starting the homework during the exercises, where we can give you advice. If you have questions later, ask the instructors by email. | ||

| + | * You can work on the homework on any computer, preferably under Linux. However, the submitted code or commands should be runnable on the course server, so do not rely on special software or settings of your own computer. | ||

| + | * Homework is submitted by copying the required files to the required directory on the server. Specific requirements will be given in the assignment. | ||

| + | * If file names are specified in the assignment, use them. If you choose the names yourself, name the files sensibly. If needed, create subdirectories, e.g. for individual tasks. | ||

| + | * Keep the submitted source code readable (indentation, sensible variable names, comments where needed) | ||

| + | |||

| + | ===Protocols=== | ||

| + | * A text document called a protocol will usually be a required part of the homework. | ||

| + | * The protocol can be in .txt or .pdf format and its name should be '''protocol.pdf''' or '''protocol.txt''' (copy it to the submitted directory) | ||

| + | * The protocol can be written in Slovak or English. | ||

| + | * If you use the txt format with diacritics, encode the file in UTF-8; for simplicity you can also write protocols without diacritics. If the protocol is in pdf format, it should be possible to select the text in it. | ||

| + | * In most assignments you will get a protocol template; follow it. | ||

| + | |||

| + | '''Protocol header, self-assessment''' | ||

| + | * At the top of the protocol, state your name, the number of the homework and your assessment of how well you managed to solve it. The assessment is a clear list of all tasks from the assignment that you at least started to solve, together with codes indicating how complete they are: | ||

| + | ** use the code HOTOVO (done) if you think you have solved the task completely and correctly | ||

| + | ** use the code ČASŤ (part) if you have not solved the whole task; in a note after the code briefly state what you have finished and what not, and possibly which parts you are unsure about | ||

| + | ** use the code MOŽNO (maybe) if you have finished the whole task but are not sure whether it is correct. Again, state in a note what you are unsure about. | ||

| + | ** use the code NIČ (nothing) if you have not even started solving the task | ||

| + | * Your assessment helps us with grading. Tasks marked HOTOVO will be checked only by spot checks; we will try to give you some feedback on tasks marked MOŽNO, and also on tasks marked ČASŤ where your note says that you had some problems. | ||

| + | * In the assessment, try to judge the correctness of your solutions as well as you can; the quality of your self-assessment may influence the total number of points. | ||

| + | |||

| + | '''Protocol contents''' | ||

| + | * Unless the assignment says otherwise, the protocol should contain the following: | ||

| + | ** '''List of submitted files:''' for each file, state its meaning and whether you created it manually, obtained it from external sources or computed it with some program. If you have a larger number of systematically named files, it is enough to explain the naming scheme in general. Files whose names are specified in the assignment do not need to be listed. | ||

| + | ** '''Sequence of all executed commands,''' or other steps by which you arrived at the results. List here the commands for processing data and running your own or other programs. You do not need to list commands related to programming itself (starting an editor, setting executable permissions, etc.), to copying the homework to the server and so on. Also include brief '''comments''' explaining the purpose of a particular command or group of commands. | ||

| + | ** '''List of sources:''' web pages and other sources that you used when solving the homework. You do not need to list the course website and sources recommended directly in the assignment. | ||

| + | Overall, the protocol should allow the reader to find their way around your files and, if interested, to repeat the same computations by which you arrived at the result. You do not need to write essays; clear and understandable notes in bullet-point form are enough. | ||

| + | |||

| + | ==Projects== | ||

| + | |||

| + | The goal of the project is to try out the skills you have learned on a particular data processing project. Your task is to obtain data, analyze them with some of the techniques from the lectures, possibly also with other technologies, and present the results in clear plots and tables. Ideally you will arrive at interesting or useful conclusions, but we will mainly grade the choice of a suitable approach and its technical difficulty. The amount of programming or data analysis itself should correspond to roughly two homework assignments, but overall the project will be more demanding, because unlike in the homework, the approach and data are not given in advance; you have to come up with them yourself, and the first idea does not always turn out to be the right one. In the project you can also use existing tools and libraries, but preferably use tools run on the command line. | ||

| + | |||

| + | Roughly two thirds into the semester, you will submit a '''project proposal''' (txt or pdf format, 0.5 to 1 page). In this proposal, state what data you will process, how you will obtain them, what the goal of the analysis is and which technologies you plan to use. You may slightly change the goals and technologies during the work on the project as circumstances require, but you should have an initial idea. We will give you feedback on the proposal; in some cases it may be necessary to change the topic slightly or completely. For a suitable proposal submitted on time you get 5% of the total grade. We recommend consulting the proposal with the instructors before submitting it. | ||

| + | |||

| + | A deadline for '''project submission''' will be set during the exam period. As with homework, submit a directory with the required files: | ||

| + | * Your '''programs and data files''' (leave out very large data files) | ||

| + | * A '''protocol''', similar to the homework protocols | ||

| + | ** txt or pdf format, brief notes in bullet-point form | ||

| + | ** contains the list of files, a detailed description of the data analysis steps (executed commands), and the sources used (data, programs, documentation and other literature, etc.) | ||

| + | * A '''project report''' in pdf format. Unlike the less formal protocol, the report should be a continuous text in a technical style, similar to e.g. a thesis. You can write in Slovak or English, but please write as grammatically correctly as you can. The report should have the following parts: | ||

| + | ** an introduction, in which you explain the goals of the project, any necessary background from the studied area, and what data you had available | ||

| + | ** a brief description of methods, in which you do not list the individual steps in detail, but rather give an overview of the approach used and its justification | ||

| + | ** results of the analysis (tables, plots, etc.) and a description of these results, possibly with the conclusions that can be drawn from them (do not forget to explain the meaning of the values in tables, axes of plots, etc.). Besides the final results of the analysis, include also partial results by which you tried to verify that the original data and the individual parts of your approach behave reasonably. | ||

| + | ** a discussion, in which you state which parts of the project were difficult and what problems you ran into, where you on the contrary managed to find a simple solution to a problem, which parts of the project you would in hindsight recommend doing differently, what you learned from the project and so on | ||

| + | |||

| + | Projects can also be done in '''pairs''', but then we require a larger project and each member should be primarily responsible for a certain part of the project, which should also be stated in the report. Pairs submit one report, but after submitting the project they meet with the instructors individually. | ||

| + | |||

| + | How to find a project topic: | ||

| + | * You can process data that you need for your bachelor's or master's thesis, or data that you need for another course (in that case state in the report which course it is and also inform the other instructor that you used the data processing as a project for this course). Especially for BIN students, this course can be a good opportunity to find a bachelor's thesis topic and start working on it. | ||

| + | * You can try to repeat an analysis from a scientific article and verify that you get the same results. It is also a good idea to modify the analysis slightly (run it on different data, change some settings, create a different type of plot, etc.) | ||

| + | * You can try to find somebody who has data that need processing but does not know how to do it (biologists, scientists from other areas, but also non-profit organizations, etc.). If you contact third parties in this way, please work on the project especially responsibly, so that you do not give our faculty a bad name. | ||

| + | * In the project you can compare several programs for the same task in terms of their speed or accuracy of results. The project will then consist of preparing the data on which you will run the programs, running them (suitably scripted) and evaluating the results. | ||

| + | * And of course you can dig up some interesting data somewhere on the internet and try to extract something from them. | ||

| + | |||

| + | ==Cheating== | ||

| + | |||

| + | * You are allowed to talk with classmates and other people about the homework and projects and about strategies for solving them. However, the code, results and text that you submit must be your own independent work. Showing your code or texts to classmates is forbidden. | ||

| + | |||

| + | * When solving the homework and the project, we expect you to use internet sources, especially various manuals and discussion forums on the covered technologies. However, do not try to find ready-made solutions to the assigned tasks. List all sources used in your homework and projects. | ||

| + | |||

| + | * If we find cases of copying or use of forbidden aids, all students involved will get zero points for the homework, project etc. in question (including those who let their classmates copy) and we will forward the case to the faculty disciplinary committee. | ||

| + | |||

| + | ==Publishing== | ||

| + | |||

| + | Assignments and course materials are freely available on this page. However, please do not publish or otherwise distribute your homework solutions unless the assignment says otherwise. You may publish your projects, as long as this does not conflict with your agreement with the project sponsor and the data provider. | ||

| + | =L01= | ||

| + | =Lecture 1: Perl, part 1= | ||

| + | |||

| + | ==Why Perl== | ||

| + | * From [https://en.wikipedia.org/wiki/Perl Wikipedia:] It has been nicknamed "the Swiss Army chainsaw of scripting languages" because of its flexibility and power, and possibly also because of its "ugliness". | ||

| + | |||

| + | Official slogans: | ||

| + | * There's more than one way to do it | ||

| + | * Easy things should be easy and hard things should be possible | ||

| + | |||

| + | Advantages | ||

| + | * Good capabilities for processing text files, regular expressions, running external programs etc. | ||

| + | * Closer to common programming languages than shell scripts | ||

| + | * Perl one-liners on the command line can replace many other tools such as sed and awk | ||

| + | * Many existing libraries | ||

| + | |||

| + | Disadvantages | ||

| + | * Quirky syntax | ||

| + | * It is easy to write very unreadable programs (Perl is sometimes jokingly called a write-only language) | ||

| + | * Quite slow and uses a lot of memory. If possible, do not read the entire input into memory; process it line by line | ||

| + | |||

| + | Warning: we will use Perl 5, Perl 6 is quite a different language | ||

| + | |||

| + | ==Sources of Perl-related information== | ||

| + | * Man pages in the package perl-doc: | ||

| + | ** '''man perlintro''' introduction to Perl | ||

| + | ** '''man perlfunc''' list of standard functions in Perl | ||

| + | ** '''perldoc -f split''' describes function split, similarly other functions | ||

| + | ** '''perldoc -q sort''' shows answers to commonly asked questions (FAQ) | ||

| + | ** '''man perlretut''' and '''man perlre''' regular expressions | ||

| + | ** '''man perl''' list of other manual pages about Perl | ||

| + | * The same content on the web http://perldoc.perl.org/ | ||

| + | * Various web tutorials e.g. [http://www.perl.com/pub/a/2000/10/begperl1.html this one] | ||

| + | * Books | ||

| + | ** Simon Cozens: Beginning Perl [http://www.perl.org/books/beginning-perl/] freely downloadable | ||

| + | ** Larry Wall et al: Programming Perl [http://oreilly.com/catalog/9780596000271/] a classic, known as the Camel book | ||

| + | * '''Bioperl''' [http://www.bioperl.org/wiki/Main_Page] big library for bioinformatics | ||

| + | * Perl for Windows: http://strawberryperl.com/ | ||

| + | |||

| + | ==Hello world== | ||

| + | It is possible to run the code directly from a command line (more later): | ||

| + | <pre> | ||

| + | perl -e'print "Hello world\n"' | ||

| + | </pre> | ||

| + | |||

| + | This is essentially equivalent to the following code stored in a file: | ||

| + | <pre> | ||

| + | #! /usr/bin/perl -w | ||

| + | use strict; | ||

| + | print "Hello world!\n"; | ||

| + | </pre> | ||

| + | |||

| + | * First line is a path to the interpreter | ||

| + | * Switch -w turns warnings on, e.g. when we use an undefined value (equivalent to "use warnings;") | ||

| + | * Second line <tt>use strict</tt> switches on stricter syntax checks, e.g. all variables must be declared | ||

| + | * Use of -w and use strict is strongly recommended | ||

| + | |||

| + | * Store the program in a file, e.g. <tt>hello.pl</tt> | ||

| + | * Make it executable (<tt>chmod a+x hello.pl</tt>) | ||

| + | * Run it with command <tt>./hello.pl</tt> | ||

| + | * Also possible to run as <tt>perl hello.pl</tt> (e.g. if we don't have the path to the interpreter in the file or the executable bit set) | ||

| + | |||

| + | ==The first input file for today: sequence repeats== | ||

| + | * In genomes some sequences occur in many copies (often not exactly equal, only similar) | ||

| + | * We have downloaded a table containing such sequence repeats on chromosome 2L of the fruitfly Drosophila melanogaster | ||

| + | * It was obtained as follows: on the webpage http://genome.ucsc.edu/ we select the Drosophila genome, then in the main menu select Tools, Table browser, select group: variation and repeats, track: RepeatMasker, region: position chr2L, output format: all fields from the selected table, and output file: repeats.txt | ||

| + | * Each line of the file contains data about one repeat in the selected chromosome. The first line contains column names. Columns are tab-separated. Here are the first two lines: | ||

| + | <pre> | ||

| + | #bin swScore milliDiv milliDel milliIns genoName genoStart genoEnd genoLeft strand repName repClass repFamily repStart repEnd repLeft id | ||

| + | 585 778 167 7 20 chr2L 1 154 -23513558 + HETRP_DM Satellite Satellite 1519 1669 -203 1 | ||

| + | </pre> | ||

| + | * The file can be found at our server under filename <tt>/tasks/hw01/repeats.txt</tt> (17185 lines) | ||

| + | * A small randomly selected subset of the table rows is in file <tt>/tasks/hw01/repeats-small.txt</tt> (159 lines) | ||

| + | |||

| + | ==A sample Perl program== | ||

| + | For each type of repeat (column 11 of the file when counting from 0) we want to compute the number of repeats of this type | ||

| + | <pre> | ||

| + | #!/usr/bin/perl -w | ||

| + | use strict; | ||

| + | |||

| + | #associative array (hash), with repeat type as key | ||

| + | my %count; | ||

| + | |||

| + | while(my $line = <STDIN>) { # read every line on input | ||

| + | chomp $line; # delete end of line, if any | ||

| + | |||

| + | if($line =~ /^#/) { # skip commented lines | ||

| + | next; # similar to "continue" in C, move to next iteration | ||

| + | } | ||

| + | |||

| + | # split the input line to columns on every tab, store them in an array | ||

| + | my @columns = split "\t", $line; | ||

| + | |||

| + | # check input - should have at least 17 columns | ||

| + | die "Bad input '$line'" unless @columns >= 17; | ||

| + | |||

| + | my $type = $columns[11]; | ||

| + | |||

| + | # increase counter for this type | ||

| + | $count{$type}++; | ||

| + | } | ||

| + | |||

| + | # write out results, types sorted alphabetically | ||

| + | foreach my $type (sort keys %count) { | ||

| + | print $type, " ", $count{$type}, "\n"; | ||

| + | } | ||

| + | </pre> | ||

| + | |||

| + | This program does the same thing as the following one-liner (more on one-liners in two weeks) | ||

| + | <pre> | ||

| + | perl -F'"\t"' -lane 'next if /^#/; die unless @F>=17; $count{$F[11]}++; END { foreach (sort keys %count) { print "$_ $count{$_}" }}' filename | ||

| + | </pre> | ||

| + | |||

| + | ==Variables, types== | ||

| + | |||

| + | ===Scalar variables=== | ||

| + | * Scalar variables start with $, they can hold undefined value (<tt>undef</tt>), string, number, reference etc. | ||

| + | * Perl converts automatically between strings and numbers | ||

| + | <pre> | ||

| + | perl -e'print((1 . "2")+1, "\n")' | ||

| + | 13 | ||

| + | perl -e'print(("a" . "2")+1, "\n")' | ||

| + | 1 | ||

| + | perl -we'print(("a" . "2")+1, "\n")' | ||

| + | Argument "a2" isn't numeric in addition (+) at -e line 1. | ||

| + | 1 | ||

| + | </pre> | ||

| + | * If we switch on strict parsing, each variable needs to be declared with my; several variables can be declared and initialized as follows: <tt>my ($a,$b) = (0,1);</tt> | ||

| + | * Usual set of C-style [http://perldoc.perl.org/perlop.html operators], power is **, string concatenation . | ||

| + | * Numbers are compared by <, <=, ==, != etc., strings by lt, le, eq, ne, gt, ge | ||

| + | * Comparison operator $a cmp $b for strings, $a <=> $b for numbers: returns -1 if $a<$b, 0 if they are equal, +1 if $a>$b | ||
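The difference between numeric and string comparison is easy to get wrong, so here is a small sketch. The helper <tt>compare_both</tt> is a hypothetical name introduced only for this illustration.

```perl
use strict;
use warnings;

# Hypothetical helper: compare two values both as numbers and as strings.
# Returns a pair (numeric result, string result), each -1, 0 or 1.
sub compare_both {
    my ($x, $y) = @_;
    return ($x <=> $y, $x cmp $y);
}

# 10 is bigger than 9 as a number, but "10" sorts before "9" as a string,
# because strings are compared character by character
my ($num, $str) = compare_both(10, 9);
print "numeric: $num, string: $str\n";

print 2 ** 10, "\n";          # power operator
print "abc" . "def", "\n";    # string concatenation
```

Using <tt>==</tt> on strings or <tt>eq</tt> on numbers is a common source of bugs; with warnings switched on, Perl reports at least the cases where a non-numeric string is used as a number.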

| + | |||

| + | ===Arrays=== | ||

| + | * Names start with @, e.g. @a | ||

| + | * Access to element 0 in array: $a[0] | ||

| + | ** Starts with $, because the expression as a whole is a scalar value | ||

| + | * Length of array <tt>scalar(@a)</tt>. In scalar context, @a is the same thing. | ||

| + | ** e.g. <tt>for(my $i=0; $i<@a; $i++) { ... }</tt> | ||

| + | * If using non-existent indexes, they will be created, initialized to undef (++, += treat undef as 0) | ||

| + | * Stack/vector using functions push and pop: push @a, (1,2,3); $x = pop @a; | ||

| + | * Analogously, shift and unshift work on the left end of the array (slower) | ||

| + | * Sorting | ||

| + | ** @a = sort @a; (sorts alphabetically) | ||

| + | ** @a = sort {$a <=> $b} @a; (sort numerically) | ||

| + | ** { } can contain arbitrary comparison function, $a and $b are the two compared elements | ||

| + | * Array concatenation @c = (@a,@b); | ||

| + | * Swap values of two variables: ($x,$y) = ($y,$x); | ||

| + | * Iterate through values of an array (values can be changed): | ||

| + | <pre> | ||

| + | perl -e'my @a = (1,2,3); foreach my $val (@a) { $val++; } print join(" ", @a), "\n";' | ||

| + | 2 3 4 | ||

| + | </pre> | ||
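Several of the array operations listed above can be combined in one short sketch. The helper <tt>top_k</tt> is a hypothetical name; it also uses an array slice (<tt>@sorted[0 .. $k - 1]</tt>), which is not covered in the list above.

```perl
use strict;
use warnings;

# Hypothetical helper: return the $k largest numbers from a list.
sub top_k {
    my ($k, @values) = @_;
    my @sorted = sort { $b <=> $a } @values;   # descending numeric order
    $k = @sorted if $k > @sorted;              # array in scalar context is its length
    return @sorted[0 .. $k - 1];               # array slice; empty if $k is 0
}

my @queue = (3, 10, 2);
push @queue, 25;             # add to the right end
my $first = shift @queue;    # remove from the left end
print "removed $first, remaining: @queue\n";
print "two largest: ", join(" ", top_k(2, @queue)), "\n";
```

Swapping <tt>$a</tt> and <tt>$b</tt> in the comparison block is the usual way to reverse the sort order.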

| + | |||

| + | ===Associative array (hashes)=== | ||

| + | * Names start with %, e.g. %b | ||

| + | * Access element with key "X": $b{"X"} | ||

| + | * Write out all elements of associative array %b | ||

| + | <pre> | ||

| + | foreach my $key (keys %b) { | ||

| + | print $key, " ", $b{$key}, "\n"; | ||

| + | } | ||

| + | </pre> | ||

| + | * Initialization with a constant: %b = ("key1"=>"value1","key2"=>"value2") | ||

| + | ** instead of => you can also use , | ||

| + | * Test for existence of a key: if(exists $b{"X"}) {...} | ||

| + | ** Use exists rather than reading the value: a nested access such as $b{"X"}[0] silently creates the key "X" if it is missing | ||
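A typical use of a hash is counting, as in the sample program above. A minimal sketch follows; the helper name <tt>count_words</tt> is hypothetical.

```perl
use strict;
use warnings;

# Hypothetical helper: count how many times each word occurs in a list.
sub count_words {
    my @words = @_;
    my %count;
    foreach my $word (@words) {
        $count{$word}++;   # a missing key starts as undef, which ++ treats as 0
    }
    return %count;
}

my %count = count_words("A", "C", "A", "G", "A");
foreach my $key (sort keys %count) {
    print "$key $count{$key}\n";
}

# exists tests for a key without adding it to the hash
print "T was not seen\n" unless exists $count{"T"};
```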

| + | |||

| + | ===Multidimensional arrays, fun with pointers=== | ||

| + | * Pointer to a variable: \$a, \@a, \%a | ||

| + | * Pointer to an anonymous array: [1,2,3], pointer to an anonymous hash: {"key1"=>"value1"} | ||

| + | * Hash of lists: | ||

| + | <pre> | ||

| + | my %a = ("fruits"=>["apple","banana","orange"], "vegetables"=>["celery","carrot"]); | ||

| + | $x = $a{"fruits"}[1]; | ||

| + | push @{$a{"fruits"}}, "kiwi"; | ||

| + | my $aref = \%a; | ||

| + | $x = $aref->{"fruits"}[1]; | ||

| + | </pre> | ||

| + | * Module Data::Dumper has function Dumper, which will recursively print complex data structures | ||
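A hash of lists is often built incrementally while reading data. The sketch below groups names by class and prints the result with Data::Dumper. The helper <tt>group_by_class</tt>, the names repeatB and repeatC, and the class LTR are made up for illustration; only HETRP_DM and Satellite come from the repeats file above.

```perl
use strict;
use warnings;
use Data::Dumper;

# Hypothetical helper: group names by class.
# Input: list of [name, class] pairs; output: reference to a hash of arrays.
sub group_by_class {
    my @pairs = @_;
    my %groups;
    foreach my $pair (@pairs) {
        my ($name, $class) = @$pair;
        # the inner array is created automatically on first use
        push @{ $groups{$class} }, $name;
    }
    return \%groups;
}

my $groups = group_by_class(
    ["HETRP_DM", "Satellite"],
    ["repeatB", "LTR"],        # illustrative values, not from the real file
    ["repeatC", "LTR"],
);
print Dumper($groups);         # recursive dump of the whole structure
print "LTR: ", join(", ", @{ $groups->{"LTR"} }), "\n";
```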

| + | |||

| + | ==Strings, regular expressions== | ||

| + | ===Strings=== | ||

| + | * Substring: <tt>[http://perldoc.perl.org/functions/substr.html substr]($string, $start, $length)</tt> | ||

| + | ** also used to access individual characters (use length 1) | ||

| + | ** If we omit $length, the substring extends to the end of the string; a negative $start is counted from the end of the string | ||

| + | ** It can also be used to replace a substring with something else: <tt>substr($str, 0, 1) = "aaa"</tt> (replaces the first character by "aaa") | ||

| + | * Length of a string: <tt>[http://perldoc.perl.org/functions/length.html length]($str)</tt> | ||

| + | * Splitting a string to parts: <tt>[http://perldoc.perl.org/functions/split.html split] reg_expression, $string, $max_number_of_parts</tt> | ||

| + | ** if the string " " is given instead of a regular expression, splits at whitespace | ||

| + | * Connecting parts <tt>[http://perldoc.perl.org/functions/join.html join]($separator, @strings)</tt> | ||

| + | * Other useful functions: <tt>[http://perldoc.perl.org/functions/chomp.html chomp]</tt> (removes end of line), <tt>[http://perldoc.perl.org/functions/index.html index]</tt> (finds a substring), lc, uc (conversion to lowercase/uppercase), reverse (mirror image), sprintf (C-style formatting) | ||
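As an illustration of these string functions, the following sketch breaks a long sequence into lines of fixed width using substr, length and join. The helper name <tt>wrap_sequence</tt> is hypothetical.

```perl
use strict;
use warnings;

# Hypothetical helper: split a sequence into pieces of at most $width
# characters and join them with end-of-line characters.
sub wrap_sequence {
    my ($seq, $width) = @_;
    my @lines;
    for (my $pos = 0; $pos < length($seq); $pos += $width) {
        push @lines, substr($seq, $pos, $width);   # the last piece may be shorter
    }
    return join("\n", @lines);
}

print wrap_sequence("ACGTACGTAC", 4), "\n";

my @parts = split "\t", "chr2L\t1\t154";   # split a tab-separated line
print join(";", @parts), "\n";
print uc("acgt"), " ", index("ACGTAC", "GT"), "\n";
```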

| + | |||

| + | ===Regular expressions=== | ||

| + | * more in [http://perldoc.perl.org/perlretut.html] | ||

| + | <pre> | ||

| + | $line =~ s/\s+$//; # remove whitespace at the end of the line | ||

| + | $line =~ s/[0-9]+/X/g; # replace each sequence of numbers with character X | ||

| + | |||

| + | #extract the name of a fasta sequence: the header line starts with > and the name runs until the first whitespace | ||

| + | #(\S means non-whitespace), the result is stored in $1, as specified by () | ||

| + | if($line =~ /^\>(\S+)/) { $name = $1; } | ||

| + | |||

| + | perl -le'$X="123 4 567"; $X=~s/[0-9]+/X/g; print $X' | ||

| + | X X X | ||

| + | </pre> | ||
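The fasta header example can be extended to two capture groups. The sketch below assumes that the header consists of a name followed by an optional free-text description; <tt>parse_fasta_header</tt> is a hypothetical helper name.

```perl
use strict;
use warnings;

# Hypothetical helper: split a fasta header line into name and description.
# Returns an empty list if the line is not a header.
sub parse_fasta_header {
    my ($line) = @_;
    # first () captures the name, second () the rest of the line
    if ($line =~ /^>(\S+)\s*(.*)$/) {
        return ($1, $2);
    }
    return;
}

foreach my $line (">seq1 sample read", ">seq2", "ACGTACGT") {
    my @result = parse_fasta_header($line);
    if (@result) {
        print "name='$result[0]' description='$result[1]'\n";
    } else {
        print "not a header: $line\n";
    }
}
```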

| + | |||

| + | ==Conditionals, loops== | ||

| + | <pre> | ||

| + | if(expression) { # {} and () cannot be omitted | ||

| + | commands | ||

| + | } elsif(expression) { | ||

| + | commands | ||

| + | } else { | ||

| + | commands | ||

| + | } | ||

| + | |||

| + | command if expression; # here () not necessary | ||

| + | command unless expression; | ||

| + | die "negative value of x: $x" unless $x>=0; | ||

| + | |||

| + | for(my $i=0; $i<100; $i++) { | ||

| + | print $i, "\n"; | ||

| + | } | ||

| + | |||

| + | foreach my $i (0..99) { | ||

| + | print $i, "\n"; | ||

| + | } | ||

| + | |||

| + | $x=1; | ||

| + | while(1) { | ||

| + | $x *= 2; | ||

| + | last if $x>=100; | ||

| + | } | ||

| + | </pre> | ||

| + | |||

| + | * The undefined value, the number 0 and the strings "" and "0" evaluate as false, but I would recommend always using explicit tests in conditional expressions, e.g. if(defined $x), if($x eq ""), if($x==0) etc. | ||

| + | |||

| + | ==Input, output== | ||

| + | * Reading one line from standard input: <tt>$line = <STDIN></tt> | ||

| + | * If no more input data available, returns undef | ||

| + | * See also [http://perldoc.perl.org/perlop.html#I%2fO-Operators] | ||

| + | * Special idiom <tt>while(my $line = <STDIN>)</tt> equivalent to <tt>while (defined(my $line = <STDIN>))</tt> | ||

| + | ** iterates through all lines of input | ||

| + | * <tt>chomp $line</tt> removes "\n", if any, from the end of the string | ||

| + | * output to stdout through <tt>[http://perldoc.perl.org/functions/print.html print]</tt> or <tt>[http://perldoc.perl.org/functions/printf.html printf]</tt> | ||

| + | |||
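The input idiom above can be sketched as a small function that counts lines and characters. To keep the example self-contained, it reads from an in-memory string opened as a file, which is an assumption of this sketch; a real script would read from STDIN instead.

```perl
use strict;
use warnings;

# Count lines and characters (without end of line) read from a filehandle.
sub count_lines {
    my ($fh) = @_;
    my ($lines, $chars) = (0, 0);
    while (my $line = <$fh>) {    # the loop ends when <$fh> returns undef
        chomp $line;              # strip the trailing "\n", if present
        $lines++;
        $chars += length($line);
    }
    return ($lines, $chars);
}

# Demonstration on an in-memory "file" (illustration only)
my $text = "first line\nsecond\n";
open my $in, "<", \$text or die "cannot open in-memory file: $!";
my ($n, $total) = count_lines($in);
close $in;
printf "%d lines, %d characters\n", $n, $total;
```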

| + | ==The second input file for today: DNA sequencing reads (fastq)== | ||

| + | |||

| + | * DNA sequencing machines can read only short pieces of DNA called reads | ||

| + | * Reads are usually stored in [https://en.wikipedia.org/wiki/FASTQ_format fastq format] | ||

| + | * Files can be very large (gigabytes or more), but we will use only a small sample from bacteria Staphylococcus aureus, source [http://gage.cbcb.umd.edu/data/Staphylococcus_aureus/Data.original/] | ||

| + | * Each read is on 4 lines: | ||

| + | ** line 1: ID of the read and other description, line starts with @ | ||

| + | ** line 2: DNA sequence, A,C,G,T are bases (nucleotides) of DNA, N means unknown base | ||

| + | ** line 3: + | ||

| + | ** line 4: quality string, which is the string of the same length as DNA in line 2. Each character represents quality of one base in DNA. If p is the probability that this base is wrong, the quality string will contain character with ASCII value 33+(-10 log p), where log is decimal logarithm. This means that higher ASCII means base of higher quality. Character ! (ASCII 33) means probability 1 of error, character $ (ASCII 36) means 50% error, character + (ASCII 43) is 10% error, character 5 (ASCII 53) is 1% error. | ||

| + | ** Note that some sequencing platforms represent qualities differently (see article linked above) | ||

| + | * Our file has all reads of equal length (this is not always the case) | ||

| + | |||
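The relationship between a quality character and the error probability can be checked with a short sketch. It assumes the offset-33 encoding described above; the function name quality_to_prob is made up for this example.

```perl
use strict;
use warnings;

# Convert one quality character to (quality, error probability),
# assuming the encoding ASCII = 33 + (-10 log10 p).
sub quality_to_prob {
    my ($char) = @_;
    my $q = ord($char) - 33;      # quality value, i.e. -10 log10(p)
    my $p = 10 ** (-$q / 10);     # invert the formula to get p
    return ($q, $p);
}

foreach my $char ('!', '+', '5', 'I') {
    my ($q, $p) = quality_to_prob($char);
    printf "%s\t%d\t%.4f\n", $char, $q, $p;
}
```

The characters ! + and 5 reproduce the values 1, 0.1 and 0.01 listed above; the character I, which is frequent at the start of the example reads, corresponds to quality 40.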

| + | The first 4 reads from file /tasks/hw01/reads-small.fastq | ||

| + | <pre> | ||

| + | @SRR022868.1845/1 | ||

| + | AAATTTAGGAAAAGATGATTTAGCAACATTTAGCCTTAATGAAAGACCAGATTCTGTTGCCATGTTTGAATGCCTTAAACCAGTAGCAGAATCAGTATAAA | ||

| + | + | ||

| + | IICIIIIIIIIIID%IIII8>I8III1II,II)I+III*II<II,E;-HI>+I0IB99I%%2GI*=?5*&1>'$0;%'+%%+;#'$&'%%$-+*$--*+(% | ||

| + | @SRR022868.1846/1 | ||

| + | TAGCGTTGTAAAATAAATTTCTAGAATGGAAGTGATGATATTGAAATACACTCAGATCCTGAATGAAAGATTTATTAAAGTTAAGACGAGAGTCTCATTAT | ||

| + | + | ||

| + | 4CIIIIIIII52I)IIIII0I16IIIII2IIII;IIAII&I6AI+*+&G5&G.@8/6&%&,03:*.$479.91(9--$,*&/3"$#&*'+#&##&$(&+&+ | ||

| + | </pre> | ||

| + | |||

| + | And now start on [[#HW01]] | ||

| + | =HW01= | ||

| + | See [[#L01|Lecture 1]] | ||

| + | |||

| + | ==Files== | ||

| + | We have 4 input files for this homework. We recommend creating soft links to your working directory as follows: | ||

| + | <pre> | ||

| + | ln -s /tasks/hw01/repeats-small.txt . # small version of the repeat file | ||

| + | ln -s /tasks/hw01/repeats.txt . # full version of the repeat file | ||

| + | ln -s /tasks/hw01/reads-small.fastq . # smaller version of the read file | ||

| + | ln -s /tasks/hw01/reads.fastq . # bigger version of the read file | ||

| + | </pre> | ||

| + | |||

| + | We recommend writing your protocol starting from an outline provided in <tt>/tasks/hw01/protocol.txt</tt> | ||

| + | |||

| + | ==Submitting== | ||

| + | * Directory /submit/hw01/your_username will be created for you | ||

| + | * Copy required files to this directory, including the protocol named protocol.txt or protocol.pdf | ||

| + | * You can modify these files freely until deadline, but after the deadline of the homework, you will lose access rights to this directory | ||

| + | |||

| + | ==Task A== | ||

| + | |||

| + | * Consider the program for counting repeat types in the [[#L01|lecture 1]], save it to file <tt>repeat-stat.pl</tt> | ||

| + | * Extend it to compute the average length of each type of repeat | ||

| + | ** Each row of the input table contains the start and end coordinates of the repeat in columns 7 and 6. The length is simply the difference of these two values. | ||

| + | * Output a table with three columns: type of repeat, the number of occurrences, the average length of the repeat. | ||

| + | ** Use printf to print these three items right-justified in columns of sufficient width, print the average length to 1 decimal place. | ||

| + | * If you run your script on the small file, the output should look something like this (exact column widths may differ): | ||

| + | <pre> | ||

| + | ./repeat-stat.pl < repeats-small.txt | ||

| + | DNA 5 377.4 | ||

| + | LINE 4 410.2 | ||

| + | LTR 13 355.4 | ||

| + | Low_complexity 22 47.2 | ||

| + | RC 8 236.2 | ||

| + | Simple_repeat 106 39.0 | ||

| + | </pre> | ||

| + | * Include in your '''protocol''' the output when you run your script on the large file: <tt>./repeat-stat.pl < repeats.txt</tt> | ||

| + | * Find out on [https://en.wikipedia.org/wiki/Retrotransposon Wikipedia], what acronyms LINE and LTR stand for. Do their names correspond to their lengths? (Write a short answer in the '''protocol'''.) | ||

| + | * '''Submit''' only your script, <tt>repeat-stat.pl</tt> | ||

| + | |||
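As a hint for the output formatting in Task A, the following sketch shows right-justified columns with printf. The type names, counts and lengths are made up, and the column widths are only one possible choice.

```perl
use strict;
use warnings;

# Made-up counts and total lengths (illustration only)
my %count        = (typeA => 3,   longer_type_name => 12);
my %total_length = (typeA => 301, longer_type_name => 1000);

foreach my $type (sort keys %count) {
    my $avg = $total_length{$type} / $count{$type};
    # %20s and %6d pad on the left (right-justify), %8.1f keeps 1 decimal place
    printf "%20s %6d %8.1f\n", $type, $count{$type}, $avg;
}
```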

| + | ==Task B== | ||

| + | |||

| + | * Write a script which reformats FASTQ file to FASTA format, call it <tt>fastq2fasta.pl</tt> | ||

| + | ** fastq file should be on standard input, fasta file written to standard output | ||

| + | * [https://en.wikipedia.org/wiki/FASTA_format FASTA format] is a typical format for storing DNA and protein sequences. | ||

| + | ** Each sequence consists of several lines of the file. The first line starts with ">" followed by the identifier of the sequence and optionally some further description separated by whitespace | ||

| + | ** The sequence itself is on the second line, long sequences are split into multiple lines | ||

| + | * In our case, the name of the sequence will be the ID of the read with @ replaced by > and / replaced by _ | ||

| + | ** you can try to use [http://perldoc.perl.org/perlop.html#Quote-Like-Operators tr or s operators] | ||

| + | * For example, the first two reads of reads.fastq are: | ||

| + | <pre> | ||

| + | @SRR022868.1845/1 | ||

| + | AAATTTAGGAAAAGATGATTTAGCAACATTTAGCCTTAATGAAAGACCAGATTCTGTTGCCATGTTTGAATGCCTTAAACCAGTAGCAGAATCAGTATAAA | ||

| + | + | ||

| + | IICIIIIIIIIIID%IIII8>I8III1II,II)I+III*II<II,E;-HI>+I0IB99I%%2GI*=?5*&1>'$0;%'+%%+;#'$&'%%$-+*$--*+(% | ||

| + | @SRR022868.1846/1 | ||

| + | TAGCGTTGTAAAATAAATTTCTAGAATGGAAGTGATGATATTGAAATACACTCAGATCCTGAATGAAAGATTTATTAAAGTTAAGACGAGAGTCTCATTAT | ||

| + | + | ||

| + | 4CIIIIIIII52I)IIIII0I16IIIII2IIII;IIAII&I6AI+*+&G5&G.@8/6&%&,03:*.$479.91(9--$,*&/3"$#&*'+#&##&$(&+&+ | ||

| + | </pre> | ||

| + | * These should be reformatted as follows: | ||

| + | <pre> | ||

| + | >SRR022868.1845_1 | ||

| + | AAATTTAGGAAAAGATGATTTAGCAACATTTAGCCTTAATGAAAGACCAGATTCTGTTGCCATGTTTGAATGCCTTAAACCAGTAGCAGAATCAGTATAAA | ||

| + | >SRR022868.1846_1 | ||

| + | TAGCGTTGTAAAATAAATTTCTAGAATGGAAGTGATGATATTGAAATACACTCAGATCCTGAATGAAAGATTTATTAAAGTTAAGACGAGAGTCTCATTAT | ||

| + | </pre> | ||

| + | * '''Submit''' files <tt>fastq2fasta.pl</tt> and <tt>reads-small.fasta</tt> | ||

| + | ** the latter file is created by running <tt>./fastq2fasta.pl < reads-small.fastq > reads-small.fasta</tt> | ||

| + | |||

| + | ==Task C== | ||

| + | |||

| + | * Write a script <tt>fastq-quality.pl</tt> which for each position in a read computes the average quality | ||

| + | * Standard input has fastq file with multiple reads, possibly of different lengths | ||

| + | * As quality we will simply use ASCII values of characters in the quality string with value 33 subtracted, so the quality is -10 log p | ||

| + | ** ASCII value can be computed by function [http://perldoc.perl.org/functions/ord.html ord] | ||

| + | * Positions in reads will be numbered from 0 | ||

| + | * Since reads can differ in length, some positions are used in more reads, some in fewer | ||

| + | * For each position from 0 up to the highest position used in some read, print three numbers separated by tabs "\t": the position index, the number of times this position was used in reads, average quality at that position with 1 decimal place (you can again use printf) | ||

| + | * The last two lines when you run <tt>./fastq-quality.pl < reads-small.fastq</tt> should be | ||

| + | <pre> | ||

| + | 99 86 5.5 | ||

| + | 100 86 8.6 | ||

| + | </pre> | ||

| + | * Run the following command, which runs your script on the larger file and selects every 10th position. Include the output in your '''protocol'''. Do you see any trend in quality values with increasing position? (Include a short comment in '''protocol'''.) | ||

| + | <pre> | ||

| + | ./fastq-quality.pl < reads.fastq | perl -lane 'print if $F[0]%10==0' | ||

| + | </pre> | ||

| + | * '''Submit''' only <tt>fastq-quality.pl</tt> | ||

| + | |||

| + | ==Task D== | ||

| + | |||

| + | * Write script <tt>fastq-trim.pl</tt> that trims low quality bases from the end of each read and filters out short reads | ||

| + | * This script should read a fastq file from standard input and write trimmed fastq file to standard output | ||

| + | * It should also accept two command-line arguments: character Q and integer L | ||

| + | ** We have not covered processing command line arguments, but you can use the code snippet below for this | ||

| + | * Q is the minimum acceptable quality (characters from quality string with ASCII value >= ASCII value of Q are ok) | ||

| + | * L is the minimum acceptable length of a read | ||

| + | * First find the last base in a read which has quality at least Q (if any). All bases after this base will be removed from both sequence and quality string | ||

| + | * If the resulting read has fewer than L bases, it is omitted from the output | ||

| + | |||

| + | You can check your program by the following tests: | ||

| + | * If you run the following two commands, you should get tmp identical with input and thus output of diff should be empty | ||

| + | <pre> | ||

| + | ./fastq-trim.pl '!' 101 < reads-small.fastq > tmp # trim at quality ASCII >=33 and length >=101 | ||

| + | diff reads-small.fastq tmp # output should be empty (no differences) | ||

| + | </pre> | ||

| + | |||

| + | * If you run the following two commands, you should see differences in 4 reads, 2 bases trimmed from each | ||

| + | <pre> | ||

| + | ./fastq-trim.pl '"' 1 < reads-small.fastq > tmp # trim at quality ASCII >=34 and length >=1 | ||

| + | diff reads-small.fastq tmp # output should be differences in 4 reads | ||

| + | </pre> | ||

| + | |||

| + | * If you run the following commands, you should get empty output (no reads meet the criteria): | ||

| + | <pre> | ||

| + | ./fastq-trim.pl d 1 < reads-small.fastq # quality ASCII >=100, length >= 1 | ||

| + | ./fastq-trim.pl '!' 102 < reads-small.fastq # quality ASCII >=33 and length >=102 | ||

| + | </pre> | ||

| + | |||

| + | Further runs and submitting | ||

| + | * Run <tt>./fastq-trim.pl '(' 95 < reads-small.fastq > reads-small-filtered.fastq # quality ASCII >= 40</tt> | ||

| + | * '''Submit''' files <tt>fastq-trim.pl</tt> and <tt>reads-small-filtered.fastq</tt> | ||

| + | * If you have done task C, run quality statistics on a trimmed version of the bigger file using command below and include in the '''protocol''' the result. Comment in the '''protocol''' on differences between statistics on the whole file in part C and D. Are they as you expected? | ||

| + | <pre> | ||

| + | ./fastq-trim.pl 2 50 < reads.fastq | ./fastq-quality.pl | perl -lane 'print if $F[0]%10==0' # quality ASCII >= 50 | ||

| + | </pre> | ||

| + | * Note: you have created tools which can be combined, e.g. you can create quality-trimmed version of the fasta file by first trimming fastq and then converting to fasta (no need to submit these files) | ||

| + | |||

| + | Parsing command-line arguments in this task (they will be stored in variables $Q and $L): | ||

| + | <pre> | ||

| + | #!/usr/bin/perl -w | ||

| + | use strict; | ||

| + | |||

| + | my $USAGE = " | ||

| + | Usage: | ||

| + | $0 Q L < input.fastq > output.fastq | ||

| + | |||

| + | Trim from the end of each read bases with ASCII quality value less | ||

| + | than the given threshold Q. If the length of the read after trimming | ||

| + | is less than L, the read will be omitted from output. | ||

| + | |||

| + | L is a non-negative integer, Q is a character | ||

| + | "; | ||

| + | |||

| + | # check that we have exactly 2 command-line arguments | ||

| + | die $USAGE unless @ARGV==2; | ||

| + | # copy command-line arguments to variables Q and L | ||

| + | my ($Q, $L) = @ARGV; | ||

| + | # check that $Q is one character and $L looks like a non-negative integer | ||

| + | die $USAGE unless length($Q)==1 && $L=~/^[0-9]+$/; | ||

| + | </pre> | ||

| + | =L02= | ||

| + | ==Motivation: Building Phylogenetic Trees== | ||

| + | The task for today will be to build a [https://en.wikipedia.org/wiki/Phylogenetic_tree phylogenetic tree] of several species using sequences of several genes. | ||

| + | * A phylogenetic tree is a tree showing evolutionary history of these species. Leaves are target present-day species, internal nodes are their common ancestors. | ||

| + | * Input contains sequences of genes from each species. | ||

| + | * Step 1: Identify ''ortholog groups''. Orthologs are genes from different species that "correspond" to each other. This is done based on sequence similarity and we can use a tool called [http://blast.ncbi.nlm.nih.gov/Blast.cgi?CMD=Web&PAGE_TYPE=BlastDocs&DOC_TYPE=Download blast] to identify sequence similarities between individual genes. The result of ortholog group identification will be a set of genes, each gene having one sequence from each of the 6 species | ||

| + | chimp_94013 dog_84719 human_15749 macaque_34640 mouse_17461 rat_09232 | ||

| + | * Step 2: For each ortholog group, we need to align genes and build a phylogenetic tree for this gene using existing methods. We can do this using tools muscle (for alignment) and phyml (for phylogenetic tree inference). | ||

| + | |||

| + | Unaligned sequences: | ||

| + | >mouse | ||

| + | ATGCAGTTCCCGCACCCGGGGCCCGCGGCTGCGCCCGCCGTGGGAGTCCCGCTGTATGCG | ||

| + | >rat | ||

| + | ATGCAGTTCCCGCACCCGGGGCCCGCGGCTGCGCCCGCCGTCGGAGTCCCGCTGTACGCG | ||

| + | >dog | ||

| + | ATGCAGTACCACCCCGGGCCGGCGGCGGCGGGCGCCGTGGGGGTGCCGCTGTACGCG | ||

| + | >human | ||

| + | ATGCAGTACCCGCACCCCGGGCCGGCGGCGGGCGCCGTGGGGGTGCCGCTGTACGCG | ||

| + | >chimp | ||

| + | ATGCAGTACCCGCACCCCGGGCCGGCGGCGGGCGCCGTGGGGGTGCCGCTGTACGCG | ||

| + | >macaque | ||

| + | ATGCAGTACCCGCACCCCGGGCGGCGGCCGTGGGGGTGGC | ||

| + | |||

| + | Aligned sequences: | ||

| + | >mouse | ||

| + | ATGCAGTTCCCGCACCCGGGGCCCGCGGCTGCGCCCGCCGTGGGAGTCCCGCTGTATGCG | ||

| + | >rat | ||

| + | ATGCAGTTCCCGCACCCGGGGCCCGCGGCTGCGCCCGCCGTCGGAGTCCCGCTGTACGCG | ||

| + | >dog | ||

| + | ATGCAGTAC---CACCCCGGGCCGGCGGCGGCGGGCGCCGTGGGGGTGCCGCTGTACGCG | ||

| + | >human | ||

| + | ATGCAGTACCCGCACCCCGGGC---CGGCGGCGGGCGCCGTGGGGGTGCCGCTGTACGCG | ||

| + | >chimp | ||

| + | ATGCAGTACCCGCACCCCGGGC---CGGCGGCGGGCGCCGTGGGGGTGCCGCTGTACGCG | ||

| + | >macaque | ||

| + | ATGCAGTACCCGCACCCCGGGC----------GGCGGCCGTGGGGGTGGC---------- | ||

| + | |||

| + | Phylogenetic tree: | ||

| + | (mouse:0.03240286,rat:0.01544553,(dog:0.03632419,(macaque:0.01505050,(human:0.00000001,chimp:0.00000001):0.00627957):0.01396920):0.10645019); | ||

| + | |||

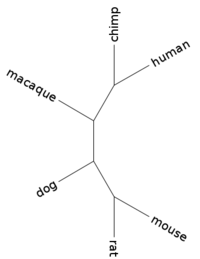

| + | [[Image:L02 human 15749.png|center|thumb|200px|Tree for gene human_15749 (branch lengths ignored)]] | ||

| + | |||

| + | |||

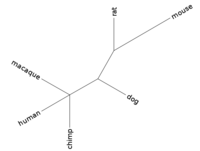

| + | * Step 3: The result of the previous step will be several trees, one for every gene. Ideally, all trees would be identical, showing the real evolutionary history of the six species. But it is not easy to infer the real tree from sequence data, so trees from different genes might differ. Therefore, in the last step, we will build a consensus tree. This can be done by using an interactive tool called phylip. | ||

| + | * Output is a single consensus tree. | ||

| + | |||

| + | <table> | ||

| + | <tr><td>[[Image:L02 human 15749.png|thumb|200px|Tree for gene human_15749]]</td> | ||

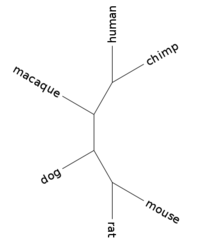

| + | <td>[[Image:L02 human 13531.png|thumb|200px|Tree for gene human_13531]]</td> | ||

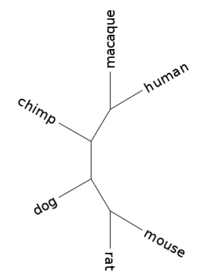

| + | <td>[[Image:L02 human 31770.png|thumb|200px|Tree for gene human_31770]]</td></tr><tr> | ||

| + | <td>[[Image:L02 consensus.png|thumb|200px|Strict consensus for the three gene trees]]</td> | ||

| + | </tr></table> | ||

| + | |||

| + | |||

| + | Our goal for today is to build a pipeline that automates the whole task. | ||

| + | |||

| + | ==Opening files== | ||

| + | <pre> | ||

| + | my $in; | ||

| + | open $in, "<", "path/file.txt" or die; # open file for reading | ||

| + | while(my $line = <$in>) { | ||

| + | # process line | ||

| + | } | ||

| + | close $in; | ||

| + | |||

| + | my $out; | ||

| + | open $out, ">", "path/file2.txt" or die; # open file for writing | ||

| + | print $out "Hello world\n"; | ||

| + | close $out; | ||

| + | # if we want to append to a file use the following instead: | ||

| + | # open $out, ">>", "path/file2.txt" or die; | ||

| + | |||

| + | # standard files | ||

| + | print STDERR "Hello world\n"; | ||

| + | my $line = <STDIN>; | ||

| + | # files as arguments of a function | ||

| + | citaj_subor($in); | ||

| + | citaj_subor(\*STDIN); | ||

| + | </pre> | ||

| + | |||

| + | ==Working with files and directories== | ||

| + | Module File::Temp creates working directories or files with automatically generated names; with CLEANUP => 1 they are deleted automatically after the program finishes. | ||

| + | <pre> | ||

| + | use File::Temp qw/tempdir/; | ||

| + | my $dir = tempdir("atoms_XXXXXXX", TMPDIR => 1, CLEANUP => 1 ); | ||

| + | print STDERR "Creating temporary directory $dir\n"; | ||

| + | open my $out, ">", "$dir/myfile.txt" or die; | ||

| + | </pre> | ||

| + | |||

| + | Copying files | ||

| + | <pre> | ||

| + | use File::Copy; | ||

| + | copy("file1","file2") or die "Copy failed: $!"; | ||

| + | copy("Copy.pm",\*STDOUT); | ||

| + | move("/dev1/fileA","/dev2/fileB"); | ||

| + | </pre> | ||

| + | Other functions for working with file system, e.g. chdir, mkdir, unlink, chmod, ... | ||

| + | |||

| + | Function glob finds files using wildcard characters, as on the command line (see also opendir, readdir, and File::Find module) | ||

| + | <pre> | ||

| + | ls *.pl | ||

| + | perl -le'foreach my $f (glob("*.pl")) { print $f; }' | ||

| + | </pre> | ||

| + | |||

| + | Additional functions for working with file names, paths, etc. in modules File::Spec and File::Basename. | ||

| + | |||

| + | Testing for the existence of a file (more in [http://perldoc.perl.org/functions/-X.html perldoc -f -X]) | ||

| + | <pre> | ||

| + | if(-r "file.txt") { ... } # is file.txt readable? | ||

| + | if(-d "dir") {.... } # is dir a directory? | ||

| + | </pre> | ||

| + | |||

| + | ==Running external programs== | ||

| + | <pre> | ||

| + | my $ret = system("command arguments"); | ||

| + | # returns -1 if the command cannot be run, otherwise the wait status (the exit code is $ret >> 8) | ||

| + | </pre> | ||

| + | |||

| + | <pre> | ||

| + | my $allfiles = `ls`; | ||

| + | # returns the result of a command as a text | ||

| + | # the exit status is not returned directly, but it is available in $? afterwards | ||

| + | </pre> | ||

| + | |||
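The two ways of running a command can be compared in a small sketch. It assumes a Unix-like system where the commands true, false and echo are available.

```perl
use strict;
use warnings;

# system returns the wait status; the exit code is in its upper 8 bits
my $ret = system("true");
die "cannot run command: $!" if $ret == -1;
print "exit code of true: ", $ret >> 8, "\n";

$ret = system("false");
print "exit code of false: ", $ret >> 8, "\n";

# backticks capture standard output; the status is left in $?
my $output = `echo hello`;
my $status = $? >> 8;
chomp $output;
print "output: $output, exit code: $status\n";
```

Checking the return value after every external command is a cheap way to stop a pipeline early when one of its steps fails.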

| + | Using pipes | ||

| + | <pre> | ||

| + | open $in, "ls |"; | ||

| + | while(my $line = <$in>) { ... } | ||

| + | </pre> | ||

| + | |||

| + | <pre> | ||

| + | open $out, "| wc"; | ||

| + | print $out "1234\n"; | ||

| + | close $out; | ||

| + | |||

| + | 1 1 5 | ||

| + | </pre> | ||

| + | |||

| + | ==Command-line arguments== | ||

| + | <pre> | ||

| + | # module for processing options in a standardized way | ||

| + | use Getopt::Std; | ||

| + | # string with usage manual | ||

| + | my $USAGE = "$0 [options] length filename | ||

| + | |||

| + | Options: | ||

| + | -l switch on lucky mode | ||

| + | -o filename write output to filename | ||

| + | "; | ||

| + | |||

| + | # all arguments to the command are stored in @ARGV array | ||

| + | # parse options and remove them from @ARGV | ||

| + | my %options; | ||

| + | getopts("lo:", \%options); | ||

| + | # now there should be exactly two arguments in @ARGV | ||

| + | die $USAGE unless @ARGV==2; | ||

| + | # copy the remaining arguments to variables | ||

| + | my ($length, $filename) = @ARGV; | ||

| + | # values of options are in the %options hash | ||

| + | if(exists $options{'l'}) { print "Lucky mode\n"; } | ||

| + | </pre> | ||

| + | For long option names, see module Getopt::Long | ||

| + | |||

| + | ==Defining functions== | ||

| + | |||

| + | Defining new functions | ||

| + | <pre> | ||

| + | sub function_name { | ||

| + | # arguments are stored in @_ array | ||

| + | my ($firstarg, $secondarg) = @_; | ||

| + | # do something | ||

| + | return ($result, $second_result); | ||

| + | } | ||

| + | </pre> | ||

| + | * Arrays and hashes are usually passed as references: function_name(\@array, \%hash); | ||

| + | * It is advantageous to pass long strings as references as well to prevent needless copying: function_name(\$sequence); | ||

| + | * References need to be dereferenced, e.g. substr($$sequence, $start, $length) or $array->[0] | ||

| + | |||
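Passing references to a function can be sketched as follows. The function count_bases and the short sequence are made up for this example.

```perl
use strict;
use warnings;

# Hypothetical function: counts how often each base of $$seq_ref occurs
# and stores the counts in the hash referenced by $counts_ref.
sub count_bases {
    my ($seq_ref, $counts_ref) = @_;
    foreach my $i (0 .. length($$seq_ref) - 1) {
        my $base = substr($$seq_ref, $i, 1);   # dereference the string
        $counts_ref->{$base}++;                # dereference the hash
    }
    return scalar(keys %$counts_ref);          # number of distinct bases
}

my $sequence = "ACGTTTGA";
my %counts;
my $distinct = count_bases(\$sequence, \%counts);
print "$distinct distinct bases\n";
foreach my $base (sort keys %counts) {
    print "$base: $counts{$base}\n";
}
```

Because the hash is passed by reference, the function modifies the caller's %counts directly and does not need to return it.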

| + | ==Bioperl== | ||

| + | <pre> | ||

| + | use Bio::Tools::CodonTable; | ||

| + | sub translate | ||

| + | { | ||

| + | my ($seq, $code) = @_; | ||

| + | my $CodonTable = Bio::Tools::CodonTable->new( -id => $code); | ||

| + | my $result = $CodonTable->translate($seq); | ||

| + | |||

| + | return $result; | ||

| + | } | ||

| + | </pre> | ||

| + | |||

| + | |||

| + | ==Defining modules== | ||

| + | Module with name XXX should be in file XXX.pm. | ||

| + | <pre> | ||

| + | package shared; | ||

| + | |||

| + | BEGIN { | ||

| + | use Exporter (); | ||

| + | our (@ISA, @EXPORT, @EXPORT_OK); | ||

| + | @ISA = qw(Exporter); | ||

| + | # symbols to export by default | ||

| + | @EXPORT = qw(funkcia1 funkcia2); # qw separates items by whitespace, not commas | ||

| + | } | ||

| + | |||

| + | sub funkcia1 { | ||

| + | ... | ||

| + | } | ||

| + | |||

| + | sub funkcia2 { | ||

| + | ... | ||

| + | } | ||

| + | |||

| + | #module must return true | ||

| + | 1; | ||

| + | </pre> | ||

| + | |||

| + | Using the module located in the same directory as .pl file: | ||

| + | <pre> | ||

| + | use FindBin qw($Bin); # $Bin is the directory with the script | ||

| + | use lib "$Bin"; # add bin to the library path | ||

| + | use shared; | ||

| + | </pre> | ||

| + | =HW02= | ||

| + | |||

| + | ==Biological background and overall approach== | ||

| + | The task for today will be to build a [https://en.wikipedia.org/wiki/Phylogenetic_tree phylogenetic tree] of several species using sequences of several genes. | ||

| + | * We will use 6 mammals: human, chimp, macaque, mouse, rat and dog | ||

| + | * A phylogenetic tree is a tree showing evolutionary history of these species. Leaves are target present-day species, internal nodes are their common ancestors. | ||

| + | * There are methods to build trees by comparing DNA or protein sequences of several present-day species. | ||

| + | * Our input contains a small selection of gene sequences from each species. In a real project we would start from all genes (approximately 20,000 per species) and would do a careful filtration of problematic sequences, but we skip this step here. | ||

| + | * The first step will be to identify which genes from different species "correspond" to each other. More exactly, we are looking for groups of ''orthologs''. To do so, we will use a simple method based on sequence similarity, see details below. Again, in a real project, more complex methods might be used. | ||

| + | * The result of ortholog group identification will be a set of genes, each gene having one sequence from each of the 6 species | ||

| + | * Next we will process each gene separately, aligning them and building a phylogenetic tree for this gene using existing methods. | ||

| + | * The result of the previous step will be several trees, one for every gene. Ideally, all trees would be identical, showing the real evolutionary history of the six species. But it is not easy to infer the real tree from sequence data, so trees from different genes might differ. Therefore, in the last step, we will build a consensus tree. | ||

| + | |||

| + | ==Technical overview== | ||

| + | |||

| + | This task can be organized in different ways, but to practice Perl, we will write a single Perl script which takes as an input a set of fasta files, each containing DNA sequences of several genes from a single species and writes on output the resulting consensus tree. | ||

| + | * For most of the steps, we will use existing bioinformatics tools. The script will run these tools and do some additional simple processing. | ||

| + | |||

| + | '''Temporary directory''' | ||

| + | * During its run, the script and various tools will generate many files. All these files will be stored in a single temporary directory which can be then easily deleted by the user. | ||

| + | * We will use Perl library [http://perldoc.perl.org/File/Temp.html File::Temp] to create this temporary directory with a unique name so that the script can be run several times simultaneously without clashing filenames. | ||

| + | * The library by default creates the directory in /tmp, but instead we will create it in the current directory so that it is not deleted at restart of the computer and so that it can be more easily inspected for any problems | ||

| + | * The library by default deletes the directory when the script finishes but again, to allow inspection by the user, we will leave the directory in place | ||

| + | |||

| + | '''Restart''' | ||

| + | * The script will have a command line option for restarting the computation and omitting the time-consuming steps that were already finished | ||

| + | * This is useful in long-running scripts because during development of the script you will want to run it many times as you add more steps. In real usage the computation can also be interrupted for various reasons. | ||

| + | * Our restart capabilities will be quite rudimentary: before running a potentially slow external program, the script will check if the temporary directory contains a non-empty file with the filename matching the expected output of the program. If the file is found, it is assumed to be correct and complete and the external program is not run. | ||

| + | |||
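The restart check described above could look roughly like the following sketch. The helper run_unless_done is hypothetical; it is not the my_run function from the skeleton script and only illustrates the test for a non-empty output file.

```perl
use strict;
use warnings;

# Hypothetical helper: run $command only if $output_file does not yet
# exist as a non-empty file. Returns 1 if the command was run, 0 if skipped.
sub run_unless_done {
    my ($command, $output_file) = @_;
    if (-s $output_file) {    # -s is true for an existing file of non-zero size
        print STDERR "Skipping '$command', found $output_file\n";
        return 0;
    }
    my $ret = system($command);
    die "Command '$command' failed" unless $ret == 0;
    return 1;
}
```

As noted above, such a check is rudimentary: a file left incomplete by an interrupted program would be non-empty and therefore wrongly accepted.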

| + | '''Command line options''' | ||

| + | * The script should be named build-tree.pl and as command-line arguments, it will get names of the species | ||

| + | ** For example, we can run the script as follows: <tt>./build-tree.pl human chimp macaque mouse rat dog</tt> | ||

| + | ** The first species, in this case human, will be so called reference species (see task A) | ||

| + | ** The script needs at least 2 species, otherwise it will write an error message and stop | ||

| + | ** For each species X there should be a file X.fa in the current directory, this is also checked by the script | ||

| + | * Restart is specified by command line option -r followed by the name of temporary directory | ||

| + | * Command-line option handling and creation of temporary directory is already implemented in the script you are given. | ||

| + | |||

| + | '''Input files''' | ||

| + | * Each input fasta X.fa file contains DNA sequences of several genes from one species X | ||

| + | * Each sequence name on a line starting with > will contain species name, underscore and gene id, e.g. ">human_00008" | ||

| + | * Species name matches name of the file, gene id is unique within the fasta file | ||

| + | * Species names and gene ids do not contain underscore, whitespace or any other special characters | ||

| + | * Sequence of each gene can be split into several lines | ||

| + | |||
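Reading a fasta file in this format can be sketched as follows. The two short sequences are made up, and the in-memory file is used only to keep the example self-contained; the function name read_fasta is an assumption of this sketch.

```perl
use strict;
use warnings;

# Sketch: read fasta records from a filehandle into a hash name => sequence,
# joining sequences that are split into several lines.
sub read_fasta {
    my ($fh) = @_;
    my (%seqs, $name);
    while (my $line = <$fh>) {
        chomp $line;
        if ($line =~ /^>(\S+)/) {     # header line, name up to first whitespace
            $name = $1;
            $seqs{$name} = "";
        } elsif (defined $name) {
            $seqs{$name} .= $line;    # append the next piece of the sequence
        }
    }
    return \%seqs;
}

# Made-up input (illustration only)
my $text = ">human_00008\nACGT\nTTGA\n>human_34035\nGGCC\n";
open my $in, "<", \$text or die "cannot open in-memory file: $!";
my $seqs = read_fasta($in);
close $in;
foreach my $name (sort keys %$seqs) {
    my ($species, $id) = split "_", $name;   # species name and gene id
    print "$species $id $seqs->{$name}\n";
}
```

Splitting the name at the underscore is safe here only because species names and gene ids are guaranteed not to contain underscores.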

| + | ==Files and submitting== | ||

| + | |||

| + | In /tasks/hw02/ you will find the following files: | ||

| + | * 6 fasta files (*.fa) | ||

| + | * skeleton script build-tree.pl | ||

| + | ** This script already contains handling of command line options, entire task B, potentially useful functions my_run and my_delete and suggested function headers for individual tasks. Feel free to change any of this. | ||

| + | * outline of protocol protocol.txt | ||

| + | * directory example with files for two different groups of genes | ||

| + | Copy the files to your directory and continue writing the script | ||

| + | |||

| + | Submitting | ||

| + | * Submit the script, protocol protocol.txt or protocol.pdf and temporary directory with all files created in the run of your script on all 6 species with human as reference. | ||

| + | * Since the commands and names of files are specified in the homework, you do not need to write them in the protocol (unless you change them). Therefore it is sufficient if the protocol contains self-assessment and any used information sources other than those linked from this assignment or lectures. | ||

| + | * Submit by copying to /submit/hw02/your_username | ||

| + | |||

| + | ==Task A: run blast to find similar sequences== | ||

| + | * To find orthologs, we use a simple method by first finding local alignments (regions of sequence similarity) between genes from different species | ||

| + | * For finding alignments, we will use tool [http://blast.ncbi.nlm.nih.gov/Blast.cgi?CMD=Web&PAGE_TYPE=BlastDocs&DOC_TYPE=Download blast] (ubuntu package blast2) | ||

| + | * Example of running blast: | ||

| + | |||

| + | formatdb -p F -i human.fa | ||

| + | blastall -p blastn -m 9 -d human.fa -i mouse.fa -e 1e-5 | ||

| + | |||

| + | * Example of output file: | ||

| + | |||

| + | # BLASTN 2.2.26 [Sep-21-2011] | ||

| + | # Query: mouse_00492 | ||

| + | # Database: human.fa | ||

| + | # Fields: Query id, Subject id, % identity, alignment length, mismatches, gap openings, q. start, q. end, s. start, s. end, e-value, bit score | ||

| + | mouse_22930 human_00008 90.79 1107 102 0 1 1107 1 1107 0.0 1386 | ||

| + | mouse_22930 human_34035 80.29 350 69 0 745 1094 706 1055 3e-37 147 | ||

| + | mouse_22930 human_34035 79.02 143 30 0 427 569 391 533 8e-07 46.1 | ||

| + | |||

| + | (note last column - score) | ||

| + | |||

| + | * For each non-reference ''species'', save the result of blast search in file ''species''.blast in the temporary directory. | ||

| + | |||

| + | ==Task B: find orthogroups== | ||

| + | '''This part is already implemented in the skeleton file, you don't need to implement or report anything in this task''' | ||

| + | * Here, we process all the '''species'''.blast files to find ortholog groups. | ||

| + | * Matches are symmetric, and there can be multiple matches for the same gene. We are looking for '''reciprocal best hits''': pairs of genes human_A and mouse_B, where mouse_B is the match with the highest score in mouse for human_A and human_A is the best-scoring match in human for mouse_B. | ||

| + | * Some genes in reference species may have no reciprocal best hits in some of the non-reference species. | ||

| + | * A gene in the reference species and all of its reciprocal best hits constitute an '''orthogroup'''. If the size of an orthogroup is the same as the number of species, we will call it a '''complete orthogroup'''. | ||

| + | * In the file genes.txt in the temporary directory, we will list all orthogroups, one per line. | ||

| + | chimp_94013 dog_84719 human_15749 macaque_34640 mouse_17461 rat_09232 | ||

| + | chimp_61053 human_18570 macaque_12627 | ||

| + | chimp_41364 human_19217 macaque_88256 rat_82436 | ||
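The reciprocal-best-hit rule described above can be sketched with awk. This is only an illustration and not the implementation from the skeleton file: it assumes a single tabular blast file (as produced with <tt>-m 9</tt>) with the query id in column 1, the subject id in column 2 and the bit score in column 12. The file name example.blast and its contents are made up.

```shell
cd "$(mktemp -d)"
# Made-up blast hits (columns as in the -m 9 output shown earlier).
cat > example.blast <<'EOF'
# Fields: Query id, Subject id, ..., bit score
mouse_1 human_1 90.0 100 10 0 1 100 1 100 0.0 200
mouse_1 human_2 80.0 100 20 0 1 100 1 100 1e-20 100
mouse_2 human_1 85.0 100 15 0 1 100 1 100 1e-30 150
mouse_2 human_2 82.0 100 18 0 1 100 1 100 1e-25 120
EOF

# For every query remember its best-scoring subject and for every subject
# its best-scoring query; print the pairs that choose each other.
rbh=$(awk '!/^#/ {
    q = $1; s = $2; score = $12 + 0
    if (!(q in qscore) || score > qscore[q]) { qscore[q] = score; qbest[q] = s }
    if (!(s in sscore) || score > sscore[s]) { sscore[s] = score; sbest[s] = q }
}
END {
    for (q in qbest) if (sbest[qbest[q]] == q) print q, qbest[q]
}' example.blast | sort)
echo "$rbh"   # prints: mouse_1 human_1
```

Here mouse_2 also has its best match in human_1, but human_1 prefers mouse_1, so mouse_2 has no reciprocal best hit.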

| + | |||

| + | ==Task C: create a file for each orthogroup== | ||

| + | * For each complete orthogroup, we will create a fasta file with corresponding DNA sequences. | ||

| + | * The file will be located in temporary directory and will be named ''genename''.fa, where ''genename'' is the name of the orthogroup gene from reference species. | ||

| + | * The fasta name for each sequence is the name of species, NOT the name of the gene. | ||

| + | >human | ||

| + | CTGCGGCTGAGAGAGATGTGTACACTGGGGACGCACTCCGGATCTGCATAGTGACCAAAGAGGGCATCAGGGAGGAAACTGTTTCCTTAAGGAAGGAC | ||

| + | >chimp | ||

| + | TGCGGCTGAGAGAGATGTGTACACTGGGGACGCACTCCGGATCTGCATAGTGACCAAAGAGGGCATCAGGGAGGAGACTGTTTCCTTAAGGAAGGAC | ||

| + | >macaque | ||

| + | CTGCGGCTGAGAGAGACGTGTACACTGGGGACGCGCTCCGGATCTGCATAGTGACCAAAGAGGGCATCAGGGAGGAGACTGTTCCCTTAAGGAAGGAC | ||

| + | >mouse | ||

| + | CAGCCGAGAGGGATGTGTATACTGGAGATGCTCTCAGGATCTGCATCGTGACCAAAGAGGGCATCAGGGAGGAAACTGTTCCCCTGCGGAAAGAC | ||

| + | >rat | ||

| + | CAGCCGAGAGGGATGTGTACACTGGAGACGCCCTCAGGATCTGCATCGTGACCAAAGAGGGCATCAGGGAGGAGACTGTTCCCCTTCGGAAAGAC | ||

| + | >dog | ||

| + | GAGGGATGTGTACACTGGGGATGCACTCAGAATCTGCATTGTGACTAAGGAGGGCATCAGGGAGGAGACTGTTCCCCTGAGGAAGGAT | ||

| + | |||

| + | ==Task D: build tree for each gene== | ||

| + | * For each orthogroup, we need to build a phylogenetic tree. | ||

| + | * The result for file ''genename''.fa should be saved in file ''genename''.tree | ||

| + | * Example of how to do this: | ||

| + | # create multiple alignment of the sequences | ||

| + | muscle -diags -in genename.fa -out genename.mfa | ||

| + | # change format of the multiple alignment | ||

| + | readseq -f12 genename.mfa -o=genename.phy -a | ||

| + | # run phylogenetic inference program | ||

| + | phyml -i genename.phy --datatype nt --bootstrap 0 --no_memory_check | ||

| + | # rename the result | ||

| + | mv genename.phy_phyml_tree.txt genename.tree | ||

| + | * You can view the multiple alignment (*.mfa and *.phy) by using program seaview | ||

| + | * You can view the resulting tree (*.tree) by using program njplot or figtree | ||

| + | |||

| + | ==Task E: build consensus tree== | ||

| + | * Trees built on individual genes can differ from each other. | ||

| + | * Therefore we build a '''consensus tree''': tree that only contains branches present in most gene trees; other branches are collapsed. | ||

| + | * phylip is an "interactive" program for manipulating trees. The specific command for [http://evolution.genetics.washington.edu/phylip/doc/consense.html building consensus trees] is | ||

| + | phylip consense | ||

| + | * The input file for phylip must contain all trees from which the consensus should be built, one per line. | ||

| + | * Text that you would otherwise type into phylip manually can instead be passed on standard input from the script. | ||

| + | * Store the output tree from phylip in all_trees.consensus in the temporary directory and also print it to standard output. | ||
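The idea of passing typed answers on standard input can be sketched as follows. The function <tt>ask_and_count</tt> is a hypothetical stand-in for an interactive program, and the tree files are made up. The answers that <tt>phylip consense</tt> itself expects are not given here and need to be checked against its documentation.

```shell
cd "$(mktemp -d)"
# Two made-up gene trees in Newick format, one per file.
echo '((human,chimp),mouse);' > geneA.tree
echo '((human,mouse),chimp);' > geneB.tree
# Collect all trees into one input file, one tree per line.
cat *.tree > all_trees.txt

# Hypothetical stand-in for an interactive program:
# it reads a file name and a confirmation from standard input.
ask_and_count() {
    read -r filename
    read -r answer
    if [ "$answer" = "Y" ]; then wc -l < "$filename"; fi
}

# The answers that would be typed by hand are passed on standard input.
n_trees=$(printf '%s\n' all_trees.txt Y | ask_and_count)
n_trees=$((n_trees))   # strip any padding printed by wc
echo "$n_trees"        # prints: 2
```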

| + | =L03= | ||

| + | Today: using command-line tools and Perl one-liners. | ||

| + | * We will do simple transformations of text files using command-line tools without writing any scripts or longer programs. | ||

| + | * You will record the commands used in your protocol | ||

| + | ** We strongly recommend making a log of commands for data processing also outside of this course | ||

| + | * If you have a log of executed commands, you can easily execute them again by copy and paste | ||

| + | * For this reason any comments are best preceded by <tt>#</tt> | ||

| + | * If you use some sequence of commands often, you can turn it into a script | ||

| + | |||

| + | Most commands have man pages or are described within <tt>man bash</tt> | ||

| + | |||

| + | ==Efficient use of command line== | ||

| + | |||

| + | Some tips for bash shell: | ||

| + | * use ''tab'' key to complete command names, path names etc | ||

| + | ** tab completion can be customized [https://www.debian-administration.org/article/316/An_introduction_to_bash_completion_part_1] | ||

| + | * use ''up'' and ''down'' keys to walk through history of recently executed commands, then edit and resubmit chosen command | ||

| + | * press ''ctrl-r'' to search in the history of executed commands | ||

| + | * at the end of a session, history is stored in <tt>~/.bash_history</tt> | ||

| + | * command <tt>history -a</tt> appends history to this file right now | ||

| + | ** you can then look into the file and copy appropriate commands to your protocol | ||

| + | * various other history tricks, e.g. special variables [http://samrowe.com/wordpress/advancing-in-the-bash-shell/] | ||

| + | * <tt>cd -</tt> goes to previously visited directory, also see <tt>pushd</tt> and <tt>popd</tt> | ||

| + | * <tt>ls -lt | head</tt> shows 10 most recent files, useful for seeing what you have done last | ||

| + | |||

| + | Instead of bash, you can use more advanced command-line environments, e.g. [http://ipython.org/notebook.html IPython notebook] | ||

| + | |||

| + | ==Redirecting and pipes== | ||

| + | |||

| + | <pre> | ||

| + | # redirect standard output to file | ||

| + | command > file | ||

| + | |||

| + | # append to file | ||

| + | command >> file | ||

| + | |||

| + | # redirect standard error | ||

| + | command 2>file | ||

| + | |||

| + | # redirect file to standard input | ||

| + | command < file | ||

| + | |||

| + | # do not forget to quote > in other uses | ||

| + | grep '>' sequences.fasta | ||

| + | # (without quotes, the shell treats > as a redirection and overwrites sequences.fasta) | ||

| + | |||

| + | # send stdout of command1 to stdin of command2 | ||

| + | command1 | command2 | ||

| + | |||

| + | # backtick operator executes command, | ||

| + | # removes trailing \n from stdout, substitutes to command line | ||

| + | # the following commands do the same thing: | ||

| + | head -n 2 file | ||

| + | head -n `echo 2` file | ||

| + | |||

| + | # redirect a string in ' ' to stdin of command head | ||

| + | head -n 2 <<< 'line 1 | ||

| + | line 2 | ||

| + | line 3' | ||

| + | |||

| + | # in some commands, file argument can be taken from stdin if denoted as - or stdin | ||

| + | # the following compares uncompressed version of file1 with file2 | ||

| + | zcat file1.gz | diff - file2 | ||

| + | </pre> | ||
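A small runnable sketch combining several of the redirections above. The file names and contents are made up for illustration, and <tt>$(...)</tt> is used as an equivalent of backticks that is easier to nest.

```shell
cd "$(mktemp -d)"
printf '>seq1\nACGT\n>seq2\nGGCC\n' > sequences.fasta

# A quoted '>' is a pattern, not a redirection: count header lines.
n_headers=$(grep -c '>' sequences.fasta)

# stdout and stderr go to different files; ls complains about the missing file.
ls sequences.fasta no_such_file > out.txt 2> err.txt || true

# $(...) substitutes the output of a command, like backticks.
first=$(head -n "$(echo 1)" sequences.fasta)

echo "$n_headers headers, first line: $first"
```

After running this, out.txt contains the name of the existing file, while the error message about no_such_file is in err.txt.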

| + | |||

| + | Make piped commands fail properly: | ||

| + | <pre> | ||

| + | set -o pipefail | ||

| + | </pre> | ||

| + | If set, the return value of a pipeline is the exit status of the last (rightmost) command that exits with a non-zero status, or zero if all commands in the pipeline exit successfully. This option is disabled by default; the pipeline then returns the exit status of the rightmost command. | ||
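The difference can be seen on a pipeline whose first command fails and whose last command succeeds. In this sketch each variant runs in its own <tt>bash -c</tt> so that the option does not leak into the current shell.

```shell
# Default behaviour: the exit status of the pipeline is that of the last command (true).
status_default=$(bash -c 'false | true; echo $?')

# With pipefail: the failure of the first command (false) is reported.
status_pipefail=$(bash -c 'set -o pipefail; false | true; echo $?')

echo "default: $status_default, pipefail: $status_pipefail"
# prints: default: 0, pipefail: 1
```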

| + | |||

| + | ==Text file manipulation== | ||

| + | ===Commands echo and cat (creating and printing files)=== | ||

| + | <pre> | ||

| + | # print text Hello and end of line to stdout | ||

| + | echo "Hello" | ||

| + | # interpret backslash combinations \n, \t etc: | ||

| + | echo -e "first line\nsecond\tline" | ||

| + | # concatenate several files to stdout | ||

| + | cat file1 file2 | ||

| + | </pre> | ||

| + | |||

| + | ===Commands head and tail (looking at start and end of files)=== | ||

| + | <pre> | ||

| + | # print 10 first lines of file (or stdin) | ||

| + | head file | ||

| + | some_command | head | ||

| + | # print the first 2 lines | ||

| + | head -n 2 file | ||

| + | # print the last 5 lines | ||

| + | tail -n 5 file | ||

| + | # print starting from line 100 (line numbering starts at 1) | ||

| + | tail -n +100 file | ||

| + | # print lines 81..100 | ||

| + | head -n 100 file | tail -n 20 | ||

| + | </pre> | ||

| + | * Docs: [http://www.gnu.org/software/coreutils/manual/html_node/head-invocation.html head], [http://www.gnu.org/software/coreutils/manual/html_node/tail-invocation.html tail] | ||
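The selection of lines 81..100 can be checked on a generated input. In this sketch <tt>seq 1 200</tt> prints the numbers 1 to 200, one per line, so each line contains its own line number.

```shell
# Lines 81..100 of a 200-line input, selected in two equivalent ways.
a=$(seq 1 200 | head -n 100 | tail -n 20)
b=$(seq 1 200 | tail -n +81 | head -n 20)

echo "$a" | head -n 1   # prints: 81
echo "$a" | tail -n 1   # prints: 100
```

Note that the first variant gives fewer than 20 correct lines if the file has fewer than 100 lines, because <tt>tail -n 20</tt> always takes the last 20 lines of whatever it receives.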

| + | |||

| + | ===Commands wc, ls -lh, od (exploring file stats and details)=== | ||

| + | <pre> | ||

| + | # prints three numbers: number of lines (-l), number of words (-w), number of bytes (-c) | ||

| + | wc file | ||

| + | |||

| + | # prints size of file in human-readable units (K,M,G,T) | ||

| + | ls -lh file | ||

| + | |||

| + | # od -a prints file or stdin with named characters | ||

| + | # allows checking whitespace and special characters | ||

| + | echo "hello world!" | od -a | ||

| + | # prints: | ||

| + | # 0000000 h e l l o sp w o r l d ! nl | ||

| + | # 0000015 | ||

| + | </pre> | ||

| + | * Docs: [http://www.gnu.org/software/coreutils/manual/html_node/wc-invocation.html wc], [http://www.gnu.org/software/coreutils/manual/html_node/ls-invocation.html ls], [http://www.gnu.org/software/coreutils/manual/html_node/od-invocation.html od] | ||

| + | |||

| + | ===Command grep (getting lines in files or stdin matching a regular expression)=== | ||

| + | <pre> | ||

| + | # -i ignores case (upper case and lowercase letters are the same) | ||

| + | grep -i chromosome file | ||

| + | # -c counts the number of matching lines in each file | ||

| + | grep -c '^[12][0-9]' file1 file2 | ||

| + | |||

| + | # other options (there are more, see the manual): | ||

| + | # -v print/count not matching lines (inVert) | ||

| + | # -n show also line numbers | ||

| + | # -B 2 -A 1 print 2 lines before each match and 1 line after match | ||

| + | # -E extended regular expressions (allows e.g. |) | ||

| + | # -F no regular expressions, set of fixed strings | ||

| + | # -f patterns in a file | ||

| + | # (good for selecting e.g. only lines matching one of "good" ids) | ||

| + | </pre> | ||

| + | * docs: [http://www.gnu.org/software/grep/manual/grep.html grep] | ||
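A sketch of selecting lines by a list of "good" ids with <tt>-F -f</tt>. The files data.txt and good_ids.txt are made up. The option <tt>-w</tt> (match whole words only) is not listed above; it is added here because a fixed string such as gene1 otherwise also matches inside gene10.

```shell
cd "$(mktemp -d)"
printf 'gene1\t5\ngene2\t7\ngene10\t3\n' > data.txt
printf 'gene1\n' > good_ids.txt

# Fixed-string patterns from a file: gene1 matches both gene1 and gene10.
substring_hits=$(grep -c -F -f good_ids.txt data.txt)

# With -w the id must match as a whole word, so only gene1 is selected.
word_hits=$(grep -c -w -F -f good_ids.txt data.txt)

# -v inverts the selection: count lines whose id is not in the list.
other_lines=$(grep -c -v -w -F -f good_ids.txt data.txt)

echo "$substring_hits $word_hits $other_lines"   # prints: 2 1 2
```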

| + | |||

| + | ===Commands sort, uniq=== | ||

| + | <pre> | ||

| + | # some useful options of sort: | ||

| + | # -g numeric sort | ||

| + | # -k which column(s) to use as key | ||

| + | # -r reverse (from largest values) | ||

| + | # -s stable | ||

| + | # -t field separator | ||

| + | |||

| + | # sorting first by column 2 numerically, in case of ties use column 1 | ||

| + | sort -k 1 file | sort -g -s -k 2,2 | ||

| + | |||

| + | # uniq outputs one line from each group of consecutive identical lines | ||

| + | # uniq -c adds the size of each group as the first column | ||

| + | # the following finds all unique lines and sorts them by frequency from most frequent | ||

| + | sort file | uniq -c | sort -gr | ||

| + | </pre> | ||
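The two-pass stable sort above can also be written as a single sort with two keys. The following sketch compares both variants on a small made-up table and then counts line frequencies. <tt>LC_ALL=C</tt> is set only to make the ordering of text reproducible.

```shell
cd "$(mktemp -d)"
export LC_ALL=C
printf 'b 2\na 10\nc 2\na 2\n' > table.txt

# Two passes: sort by column 1, then stably by column 2 as a number.
two_pass=$(sort -k 1 table.txt | sort -g -s -k 2,2)

# One pass: column 2 as a number, ties broken by column 1.
one_pass=$(sort -k 2,2g -k 1,1 table.txt)
echo "$one_pass"
# prints:
# a 2
# b 2
# c 2
# a 10

# Most frequent line: b occurs three times.
most_frequent=$(printf 'b\na\nb\nc\nb\na\n' | sort | uniq -c | sort -gr | head -n 1 | awk '{print $2}')
echo "$most_frequent"   # prints: b
```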