1-DAV-202 Data Management 2023/24

Previously 2-INF-185 Data Source Integration

Difference between revisions of "Data management 2023/24"

| Line 3: | Line 3: | ||

{| | {| | ||

|- | |- | ||

| − | | 2024-02-22 || (BB) || ALL || Introduction to the course || [[Contact]], [[Introduction]], [[Rules]], [[Connecting to server]], [[Editors]], [[Command-line basics]] | + | | 2024-02-22 || (BB) || ALL || Introduction to the course || [[#Contact]], [[#Introduction]], [[#Rules]], [[#Connecting to server]], [[#Editors]], [[#Command-line basics]] |

|- | |- | ||

| − | | || || || Introduction to Perl || [[Lperl|Lecture]], [[HWperl|Homework]] | + | | || || || Introduction to Perl || [[#Lperl|Lecture]], [[#HWperl|Homework]] |

|- | |- | ||

| − | | 2024-02-29 || (BB) || ALL || Command-line tools, Perl one-liners || [[Lbash|Lecture]], [[HWbash|Homework]] | + | | 2024-02-29 || (BB) || ALL || Command-line tools, Perl one-liners || [[#Lbash|Lecture]], [[#HWbash|Homework]] |

|- | |- | ||

| − | | 2024-03-07 || (TV) || ALL || Job scheduling and make || [[Lmake|Lecture]], [[HWmake|Homework]] | + | | 2024-03-07 || (TV) || ALL || Job scheduling and make || [[#Lmake|Lecture]], [[#HWmake|Homework]] |

|- | |- | ||

| − | | 2024-03-14 || (TV) || ALL || SQL (and Python) for beginners || [[Project]], [[Lsql|Lecture]], [[Python]], [[HWsql|Homework]] | + | | 2024-03-14 || (TV) || ALL || SQL (and Python) for beginners || [[#Project]], [[#Lsql|Lecture]], [[#Python]], [[#HWsql|Homework]] |

|- | |- | ||

| − | | 2024-03-21 || (VB) || INF/DAV || Python, web crawling, HTML parsing, sqlite3 || [[Lweb|Lecture]], [[HWweb|Homework]], [[Screen]] | + | | 2024-03-21 || (VB) || INF/DAV || Python, web crawling, HTML parsing, sqlite3 || [[#Lweb|Lecture]], [[#HWweb|Homework]], [[#Screen]] |

|- | |- | ||

| − | | || (TV) || BIN || Bioinformatics 1 (sequencing and genome assembly) || [[Lbioinf1|Lecture]], [[HWbioinf1|Homework]] | + | | || (TV) || BIN || Bioinformatics 1 (sequencing and genome assembly) || [[#Lbioinf1|Lecture]], [[#HWbioinf1|Homework]] |

|- | |- | ||

| 2024-03-28 || || || Easter | | 2024-03-28 || || || Easter | ||

|- | |- | ||

| − | | 2024-04-04 || (VB) || INF/DAV || Text data processing, flask || [[Lflask|Lecture]], [[HWflask|Homework]] | + | | 2024-04-04 || (VB) || INF/DAV || Text data processing, flask || [[#Lflask|Lecture]], [[#HWflask|Homework]] |

|- | |- | ||

| − | | || (TV) || BIN || Bioinformatics 2 (gene finding, RNA-seq) || [[Lbioinf2|Lecture]], [[HWbioinf2|Homework]] | + | | || (TV) || BIN || Bioinformatics 2 (gene finding, RNA-seq) || [[#Lbioinf2|Lecture]], [[#HWbioinf2|Homework]] |

|- | |- | ||

| − | | 2024-04-11 || (BB) || INF/DAV || Data visualization in JavaScript || [[Ljavascript|Lecture]], [[HWjavascript|Homework]] | + | | 2024-04-11 || (BB) || INF/DAV || Data visualization in JavaScript || [[#Ljavascript|Lecture]], [[#HWjavascript|Homework]] |

|- | |- | ||

| || || || Project proposals due Friday April 12 | | || || || Project proposals due Friday April 12 | ||

|- | |- | ||

| − | | || (TV) || BIN || Bioinformatics 3 (genome variants) || [[Lbioinf3|Lecture]], [[HWbioinf3|Homework]] | + | | || (TV) || BIN || Bioinformatics 3 (genome variants) || [[#Lbioinf3|Lecture]], [[#HWbioinf3|Homework]] |

|- | |- | ||

| 2024-04-18 || || || Student Research Conference | | 2024-04-18 || || || Student Research Conference | ||

| Line 33: | Line 33: | ||

| 2024-04-25 || || || No lecture | | 2024-04-25 || || || No lecture | ||

|- | |- | ||

| − | | 2024-05-02 || (BB) || ALL || Python + C++ || [[Lcpp|Lecture]], [[HWcpp|Homework]] | + | | 2024-05-02 || (BB) || ALL || Python + C++ || [[#Lcpp|Lecture]], [[#HWcpp|Homework]] |

|- | |- | ||

| − | | 2024-05-09 || (VB) || ALL || Cloud computing || [[Lcloud|Lecture]], [[HWcloud|Homework]] | + | | 2024-05-09 || (VB) || ALL || Cloud computing || [[#Lcloud|Lecture]], [[#HWcloud|Homework]] |

|- | |- | ||

| 2024-05-16 || || || No lecture | | 2024-05-16 || || || No lecture | ||

| Line 43: | Line 43: | ||

Optional materials: | Optional materials: | ||

* If you are interested in the R language, you can look at these lectures and homeworks. Files for the tasks are available on the server. However, do not submit these homeworks. | * If you are interested in the R language, you can look at these lectures and homeworks. Files for the tasks are available on the server. However, do not submit these homeworks. | ||

| − | ** [[Lr1]], [[HWr1]] tutorial to R and basic plotting | + | ** [[#Lr1]], [[#HWr1]] tutorial to R and basic plotting |

| − | ** [[Lr2]], [[HWr2]] statistical tests in R | + | ** [[#Lr2]], [[#HWr2]] statistical tests in R |

=Contact= | =Contact= | ||

'''Instructors''' | '''Instructors''' | ||

| Line 62: | Line 62: | ||

However, the course is open to students from other study programs if they satisfy the following '''informal prerequisites'''. | However, the course is open to students from other study programs if they satisfy the following '''informal prerequisites'''. | ||

* Students should be proficient in '''programming''' in at least one programming language and not afraid to learn new languages. | * Students should be proficient in '''programming''' in at least one programming language and not afraid to learn new languages. | ||

| − | * Students should have basic knowledge of working on the Linux '''command line''' (at least basic commands for working with files and folders, such as cd, mkdir, cp, mv, rm, chmod). If you do not have these skills, please study our [[Command-line basics|tutorial]] before the second lecture. The first week contains detailed instructions to get you started. | + | * Students should have basic knowledge of working on the Linux '''command line''' (at least basic commands for working with files and folders, such as cd, mkdir, cp, mv, rm, chmod). If you do not have these skills, please study our [[#Command-line basics|tutorial]] before the second lecture. The first week contains detailed instructions to get you started. |

Although most technologies covered in this course can be used for processing data from many application areas, we will illustrate some of them on examples from bioinformatics. We will explain necessary terminology from biology as needed. | Although most technologies covered in this course can be used for processing data from many application areas, we will illustrate some of them on examples from bioinformatics. We will explain necessary terminology from biology as needed. | ||

| Line 185: | Line 185: | ||

* You can also do projects in pairs, but then we require a larger project and each member should be primarily responsible for a certain part of the project | * You can also do projects in pairs, but then we require a larger project and each member should be primarily responsible for a certain part of the project | ||

| − | More detailed information on projects is on [[Project| a separate page]]. | + | More detailed information on projects is on [[#Project| a separate page]]. |

==Oral exam== | ==Oral exam== | ||

| Line 206: | Line 206: | ||

* When working on the homework and the project, we expect you to use Internet resources, especially various manuals and discussion forums on the used technologies. However, do not try to find ready-made solutions to the given tasks. List all resources used in a homework or a project. | * When working on the homework and the project, we expect you to use Internet resources, especially various manuals and discussion forums on the used technologies. However, do not try to find ready-made solutions to the given tasks. List all resources used in a homework or a project. | ||

| − | * Do not use AI chatbots or AI code generation in your editor (such as GitHub Copilot and similar tools) for solving homeworks (you do not learn anything that way!). Some use of AI code generation is permitted on the final projects; see [[Project|project rules]]. | + | * Do not use AI chatbots or AI code generation in your editor (such as GitHub Copilot and similar tools) for solving homeworks (you do not learn anything that way!). Some use of AI code generation is permitted on the final projects; see [[#Project|project rules]]. |

* If we find cases of plagiarism or unauthorized aids, all participating students will receive zero points for the relevant homework or project (including the students who provided their solutions to others to copy). Violations of academic integrity will also be referred to the faculty disciplinary committee. | * If we find cases of plagiarism or unauthorized aids, all participating students will receive zero points for the relevant homework or project (including the students who provided their solutions to others to copy). Violations of academic integrity will also be referred to the faculty disciplinary committee. | ||

| Line 293: | Line 293: | ||

** As a simple editor you can use [https://nano-editor.org/ nano] (see keyboard shortcuts at the bottom of the screen). | ** As a simple editor you can use [https://nano-editor.org/ nano] (see keyboard shortcuts at the bottom of the screen). | ||

** More advanced editors include [https://www.vim.org/ vim], [https://www.gnu.org/software/emacs/ emacs] and [https://ne.di.unimi.it/ ne] (read a tutorial before you start using them). | ** More advanced editors include [https://www.vim.org/ vim], [https://www.gnu.org/software/emacs/ emacs] and [https://ne.di.unimi.it/ ne] (read a tutorial before you start using them). | ||

| − | * When working from Windows, you can also connect to the server via WinSCP and edit the files using the built-in WinSCP editor or other editors installed on your computer (see [[Connecting_to_server]]). | + | * When working from Windows, you can also connect to the server via WinSCP and edit the files using the built-in WinSCP editor or other editors installed on your computer (see [[#Connecting_to_server]]). |

| − | * When working from Linux, you can mount your home directory using sshfs and again use editors installed on your computer (see [[Connecting_to_server]]). | + | * When working from Linux, you can mount your home directory using sshfs and again use editors installed on your computer (see [[#Connecting_to_server]]). |

=Command-line basics= | =Command-line basics= | ||

This is a brief tutorial for students who are not familiar with the Linux command line. | This is a brief tutorial for students who are not familiar with the Linux command line. | ||

| Line 460: | Line 460: | ||

This lecture is a brief introduction to the Perl scripting language. We recommend revisiting necessary parts of this lecture while working on the exercises. | This lecture is a brief introduction to the Perl scripting language. We recommend revisiting necessary parts of this lecture while working on the exercises. | ||

| − | Homework: [[HWperl]] | + | Homework: [[#HWperl]] |

==Why Perl== | ==Why Perl== | ||

| Line 902: | Line 902: | ||

=HWperl= | =HWperl= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | '''Materials:''' [[Lperl|the lecture]], [[Connecting to server]], [[Editors]] | + | '''Materials:''' [[#Lperl|the lecture]], [[#Connecting to server]], [[#Editors]] |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

| Line 942: | Line 942: | ||

'''Running the script from the lecture''' | '''Running the script from the lecture''' | ||

| − | * Consider the program for counting series in [[Lperl#A_sample_Perl_program|lecture 1]] and save it to the file <tt>series-stats.pl</tt> | + | * Consider the program for counting series in [[#A_sample_Perl_program|lecture 1]] and save it to the file <tt>series-stats.pl</tt> |

* Open the editor in the background: <tt>kate series-stats.pl &</tt> | * Open the editor in the background: <tt>kate series-stats.pl &</tt> | ||

* Copy and paste the text into the editor and save it | * Copy and paste the text into the editor and save it | ||

| Line 977: | Line 977: | ||

'''Introduction''' | '''Introduction''' | ||

| − | * In the rest of the assignment, we will write several scripts for working with [[Lperl#The_second_input_file_for_today:_DNA_sequencing_reads_.28fastq.29|FASTQ files]] introduced in the lecture. Similar scripts are often used by bioinformaticians working with DNA sequencing data. | + | * In the rest of the assignment, we will write several scripts for working with [[#The_second_input_file_for_today:_DNA_sequencing_reads_.28fastq.29|FASTQ files]] introduced in the lecture. Similar scripts are often used by bioinformaticians working with DNA sequencing data. |

* We will work with three input files: | * We will work with three input files: | ||

** <tt>/tasks/perl/reads-tiny.fastq</tt> a tiny version of the read file, including some corner cases | ** <tt>/tasks/perl/reads-tiny.fastq</tt> a tiny version of the read file, including some corner cases | ||

| Line 990: | Line 990: | ||

** The sequence itself is on the second line, long sequences can be split into multiple lines. | ** The sequence itself is on the second line, long sequences can be split into multiple lines. | ||

* In our case, the name of the sequence will be the ID of the read with the initial <tt>@</tt> replaced by <tt>></tt> and each <tt>/</tt> replaced by an underscore (<tt>_</tt>). | * In our case, the name of the sequence will be the ID of the read with the initial <tt>@</tt> replaced by <tt>></tt> and each <tt>/</tt> replaced by an underscore (<tt>_</tt>). | ||

| − | ** You can try to use the [http://perldoc.perl.org/perlop.html#Quote-Like-Operators <tt>tr</tt> (transliteration) or <tt>s</tt> (substitution) operators] (see also the [[Lperl#Regular_expressions|lecture]]) | + | ** You can try to use the [http://perldoc.perl.org/perlop.html#Quote-Like-Operators <tt>tr</tt> (transliteration) or <tt>s</tt> (substitution) operators] (see also the [[#Regular_expressions|lecture]]) |

* For example, the first two reads of the file <tt>/tasks/perl/reads.fastq</tt> are as follows (only the first 50 columns shown) | * For example, the first two reads of the file <tt>/tasks/perl/reads.fastq</tt> are as follows (only the first 50 columns shown) | ||

<pre> | <pre> | ||

| Line 1,161: | Line 1,161: | ||

=Lbash= | =Lbash= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | [[HWbash]] | + | [[#HWbash]] |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

| Line 1,448: | Line 1,448: | ||

=HWbash= | =HWbash= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | [[Lperl|Lecture on Perl]], [[Lbash|Lecture on command-line tools]] | + | [[#Lperl|Lecture on Perl]], [[#Lbash|Lecture on command-line tools]] |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

| Line 1,609: | Line 1,609: | ||

=Lmake= | =Lmake= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | [[HWmake]] | + | [[#HWmake]] |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

| Line 1,618: | Line 1,618: | ||

* We do not want to keep a command-line window open the whole time; therefore we run such jobs in the background | * We do not want to keep a command-line window open the whole time; therefore we run such jobs in the background | ||

* Simple commands to do it in Linux: | * Simple commands to do it in Linux: | ||

| − | ** To run the program immediately, then switch the whole console to the background: [https://www.gnu.org/software/screen/manual/screen.html screen], [https://tmux.github.io/ tmux] (see a [[Screen|separate section]]) | + | ** To run the program immediately, then switch the whole console to the background: [https://www.gnu.org/software/screen/manual/screen.html screen], [https://tmux.github.io/ tmux] (see a [[#Screen|separate section]]) |

** To run the command when the computer becomes idle: [http://pubs.opengroup.org/onlinepubs/9699919799/utilities/batch.html batch] | ** To run the command when the computer becomes idle: [http://pubs.opengroup.org/onlinepubs/9699919799/utilities/batch.html batch] | ||

* Now we will concentrate on '''[https://en.wikipedia.org/wiki/Oracle_Grid_Engine Sun Grid Engine]''', complex software for managing many jobs from many users on a cluster consisting of multiple computers | * Now we will concentrate on '''[https://en.wikipedia.org/wiki/Oracle_Grid_Engine Sun Grid Engine]''', complex software for managing many jobs from many users on a cluster consisting of multiple computers | ||

| Line 1,684: | Line 1,684: | ||

** By pressing <tt>Ctrl-a d</tt> you "detach" the screen, so that both shells (local and <tt>qrsh</tt>) continue running but you can close your local window | ** By pressing <tt>Ctrl-a d</tt> you "detach" the screen, so that both shells (local and <tt>qrsh</tt>) continue running but you can close your local window | ||

** Later by running <tt>screen -r</tt> you get back to your shells | ** Later by running <tt>screen -r</tt> you get back to your shells | ||

| − | ** See more details in the [[screen|separate section]] | + | ** See more details in the [[#Screen|separate section]] |

===Running many small jobs=== | ===Running many small jobs=== | ||

| Line 1,807: | Line 1,807: | ||

=HWmake= | =HWmake= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | See also the [[Lmake|lecture]] | + | See also the [[#Lmake|lecture]] |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

| Line 2,089: | Line 2,089: | ||

=Lsql= | =Lsql= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | [[HWsql]] | + | [[#HWsql]] |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

| Line 2,102: | Line 2,102: | ||

'''Outline of this lecture''' | '''Outline of this lecture''' | ||

* We introduce a simple data set. | * We introduce a simple data set. | ||

| − | * In a separate [[Python|tutorial]] for beginners in Python, we have several Python scripts for processing this data set. | + | * In a separate [[#Python|tutorial]] for beginners in Python, we have several Python scripts for processing this data set. |

* We introduce basics of working with SQLite3 and writing SQL queries. | * We introduce basics of working with SQLite3 and writing SQL queries. | ||

* We look at how to combine Python and SQLite. | * We look at how to combine Python and SQLite. | ||

| Line 2,112: | Line 2,112: | ||

* [https://perso.limsi.fr/pointal/_media/python:cours:mementopython3-english.pdf A very concise cheat sheet] | * [https://perso.limsi.fr/pointal/_media/python:cours:mementopython3-english.pdf A very concise cheat sheet] | ||

* [https://docs.python.org/3/tutorial/ A more detailed tutorial] | * [https://docs.python.org/3/tutorial/ A more detailed tutorial] | ||

| − | * [[Python|Our tutorial]] | + | * [[#Python|Our tutorial]] |

'''SQL''' | '''SQL''' | ||

| Line 2,155: | Line 2,155: | ||

'''Notes''' | '''Notes''' | ||

| − | * A different version of this data was already used in the [[Lperl#The_first_input_file_for_today:_TV_series|lecture on Perl]]. | + | * A different version of this data was already used in the [[#The_first_input_file_for_today:_TV_series|lecture on Perl]]. |

| − | * A [[Python|Python tutorial]] shows several scripts for processing these files; they are essentially longer versions of some of the SQL queries below. | + | * A [[#Python|Python tutorial]] shows several scripts for processing these files; they are essentially longer versions of some of the SQL queries below. |

==SQL and SQLite== | ==SQL and SQLite== | ||

| Line 2,322: | Line 2,322: | ||

* [https://docs.python.org/3/tutorial/ A more detailed tutorial] | * [https://docs.python.org/3/tutorial/ A more detailed tutorial] | ||

| − | We will illustrate basic features of Python on several scripts working with the files <tt>series.csv</tt> and <tt>episodes.csv</tt> introduced in the [[Lsql|lecture on SQL]]. | + | We will illustrate basic features of Python on several scripts working with the files <tt>series.csv</tt> and <tt>episodes.csv</tt> introduced in the [[#Lsql|lecture on SQL]]. |

* In the first two examples, we use only standard Python functions for reading files and split lines into columns with the <tt>split</tt> method. | * In the first two examples, we use only standard Python functions for reading files and split lines into columns with the <tt>split</tt> method. | ||

* This does not work well for the <tt>episodes.csv</tt> file, where a comma sometimes separates columns and sometimes appears in quotes within a column. Therefore we use the [https://docs.python.org/3/library/csv.html csv module], which is one of the standard Python modules. | * This does not work well for the <tt>episodes.csv</tt> file, where a comma sometimes separates columns and sometimes appears in quotes within a column. Therefore we use the [https://docs.python.org/3/library/csv.html csv module], which is one of the standard Python modules. | ||

* Alternatively, one could import CSV files via more complex libraries, such as [https://pandas.pydata.org/ Pandas]. | * Alternatively, one could import CSV files via more complex libraries, such as [https://pandas.pydata.org/ Pandas]. | ||

| − | * In the [[Lsql|lecture on SQL]], tasks similar to these scripts are done with very short SQL commands. In Pandas, we could also achieve similar results with a few commands. | + | * In the [[#Lsql|lecture on SQL]], tasks similar to these scripts are done with very short SQL commands. In Pandas, we could also achieve similar results with a few commands. |

===prog1.py=== | ===prog1.py=== | ||

| Line 2,454: | Line 2,454: | ||

=HWsql= | =HWsql= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | See also the [[Lsql|lecture]] and [[Python|Python tutorial]]. | + | See also the [[#Lsql|lecture]] and [[#Python|Python tutorial]]. |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

| Line 2,522: | Line 2,522: | ||

</syntaxhighlight> | </syntaxhighlight> | ||

| − | The '''task''' here is to extend the [[Lsql#SQL_queries|last query in the lecture]], which counts the number of episodes and the average rating for each season of each series: | + | The '''task''' here is to extend the [[#SQL_queries|last query in the lecture]], which counts the number of episodes and the average rating for each season of each series: |

<syntaxhighlight lang="sql"> | <syntaxhighlight lang="sql"> | ||

SELECT seriesId, season, COUNT() AS episode_count, AVG(rating) AS rating | SELECT seriesId, season, COUNT() AS episode_count, AVG(rating) AS rating | ||

| Line 2,608: | Line 2,608: | ||

=Lweb= | =Lweb= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | [[HWweb]] | + | [[#HWweb]] |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

| Line 2,650: | Line 2,650: | ||

* Information you need to extract is located within the structure of the HTML document. | * Information you need to extract is located within the structure of the HTML document. | ||

* To find out how the document is structured, use the <tt>Inspect element</tt> feature in Chrome or Firefox (right-click on the text of interest on the website). For example, this text on the course webpage is located within an <tt>LI</tt> element, which is within a <tt>UL</tt> element, which is inside 4 nested <tt>DIV</tt> elements, one <tt>BODY</tt> element and one <tt>HTML</tt> element. Some of these elements also have a class (starting with a dot) or an ID (starting with <tt>#</tt>). | * To find out how the document is structured, use the <tt>Inspect element</tt> feature in Chrome or Firefox (right-click on the text of interest on the website). For example, this text on the course webpage is located within an <tt>LI</tt> element, which is within a <tt>UL</tt> element, which is inside 4 nested <tt>DIV</tt> elements, one <tt>BODY</tt> element and one <tt>HTML</tt> element. Some of these elements also have a class (starting with a dot) or an ID (starting with <tt>#</tt>). | ||

[[File:Web-screenshot1.png|800px]] | [[File:Web-screenshot1.png|800px]] | ||

[[File:Web-screenshot2.png|800px]] | [[File:Web-screenshot2.png|800px]] | ||

| Line 2,727: | Line 2,727: | ||

** To get out of <tt>screen</tt> use <tt>Ctrl+A</tt> and then press <tt>D</tt>. | ** To get out of <tt>screen</tt> use <tt>Ctrl+A</tt> and then press <tt>D</tt>. | ||

** To get back in, run <tt>screen -r</tt>. | ** To get back in, run <tt>screen -r</tt>. | ||

| − | ** More details in [[screen|a separate tutorial]]. | + | ** More details in [[#Screen|a separate tutorial]]. |

* All packages are installed on our server. If you use your own laptop, you need to install them using <tt>pip</tt> (preferably in a <tt>virtualenv</tt>). | * All packages are installed on our server. If you use your own laptop, you need to install them using <tt>pip</tt> (preferably in a <tt>virtualenv</tt>). | ||

=HWweb= | =HWweb= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | See [[Lweb|the lecture]] | + | See [[#Lweb|the lecture]] |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

| Line 2,788: | Line 2,788: | ||

=Lbioinf1= | =Lbioinf1= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | [[HWbioinf1]] | + | [[#HWbioinf1]] |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

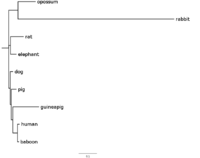

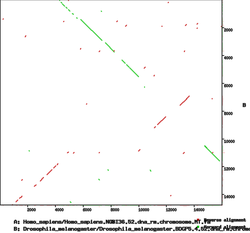

| Line 2,837: | Line 2,837: | ||

===FASTA=== | ===FASTA=== | ||

* FASTA is a format for storing DNA, RNA and protein sequences. | * FASTA is a format for storing DNA, RNA and protein sequences. | ||

| − | * We have already seen FASTA files in [[HWperl|Perl exercises]]. | + | * We have already seen FASTA files in [[#HWperl|Perl exercises]]. |

* Each sequence is given on several lines of the file. The first line starts with ">" followed by an identifier of the sequence and optionally some further description separated by whitespace. | * Each sequence is given on several lines of the file. The first line starts with ">" followed by an identifier of the sequence and optionally some further description separated by whitespace. | ||

* The sequence itself is on the second line; long sequences are split into multiple lines. | * The sequence itself is on the second line; long sequences are split into multiple lines. | ||

| Line 2,849: | Line 2,849: | ||

===FASTQ=== | ===FASTQ=== | ||

* FASTQ is a format for storing sequencing reads, containing DNA sequences but also quality information about each nucleotide. | * FASTQ is a format for storing sequencing reads, containing DNA sequences but also quality information about each nucleotide. | ||

| − | * More in the [[Lperl#The_second_input_file_for_today:_DNA_sequencing_reads_.28fastq.29|lecture on Perl]]. | + | * More in the [[#The_second_input_file_for_today:_DNA_sequencing_reads_.28fastq.29|lecture on Perl]]. |

===SAM/BAM=== | ===SAM/BAM=== | ||

| Line 2,872: | Line 2,872: | ||

=HWbioinf1= | =HWbioinf1= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | See also the [[Lbioinf1|lecture]] | + | See also the [[#Lbioinf1|lecture]] |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

| Line 2,924: | Line 2,924: | ||

:: <tt>spades.py -t 1 -m 1 --pe1-1 miseq_R1.fastq.gz --pe1-2 miseq_R2.fastq.gz -o spades > spades.log</tt> | :: <tt>spades.py -t 1 -m 1 --pe1-1 miseq_R1.fastq.gz --pe1-2 miseq_R2.fastq.gz -o spades > spades.log</tt> | ||

* Press <tt>Ctrl-a</tt> followed by <tt>d</tt> | * Press <tt>Ctrl-a</tt> followed by <tt>d</tt> | ||

| − | * This will take you out of the <tt>screen</tt> session ([[screen|more details in a separate section]]). | + | * This will take you out of the <tt>screen</tt> session ([[#Screen|more details in a separate section]]). |

* Run the <tt>top</tt> command to check that your command is running. | * Run the <tt>top</tt> command to check that your command is running. | ||

| Line 2,976: | Line 2,976: | ||

(a) How many contigs did quast report for the two assemblies? Does it agree with your counts in Task B? | (a) How many contigs did quast report for the two assemblies? Does it agree with your counts in Task B? | ||

| − | (b) What is the number of mismatches per 100kb in the two assemblies? Which one is better? Why do you think this is the case? (Look at the properties of the sequencing technologies used, as described in the [[Lbioinf1#Overview_of_DNA_sequencing_and_assembly|lecture]].) | + | (b) What is the number of mismatches per 100kb in the two assemblies? Which one is better? Why do you think this is the case? (Look at the properties of the sequencing technologies used, as described in the [[#Overview_of_DNA_sequencing_and_assembly|lecture]].) |

(c) What portion of the reference sequence is covered by the two assemblies (reported as <tt>genome fraction</tt>)? Which assembly is better in this aspect? | (c) What portion of the reference sequence is covered by the two assemblies (reported as <tt>genome fraction</tt>)? Which assembly is better in this aspect? | ||

| Line 3,051: | Line 3,051: | ||

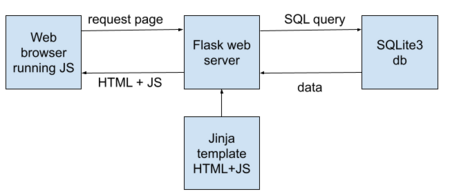

=Lflask= | =Lflask= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | [[HWflask]] | + | [[#HWflask]] |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

| Line 3,089: | Line 3,089: | ||

However, if you are running flask on a server, you probably want to run the web browser on your local machine. In such a case, you need to use an '''ssh tunnel''' to channel the traffic through the ssh connection: | However, if you are running flask on a server, you probably want to run the web browser on your local machine. In such a case, you need to use an '''ssh tunnel''' to channel the traffic through the ssh connection: | ||

* On your local machine, open another console window and create an ssh tunnel as follows: <tt>ssh -L PORT:localhost:PORT username@vyuka.compbio.fmph.uniba.sk</tt> (replace PORT with the port number you have selected to run Flask) | * On your local machine, open another console window and create an ssh tunnel as follows: <tt>ssh -L PORT:localhost:PORT username@vyuka.compbio.fmph.uniba.sk</tt> (replace PORT with the port number you have selected to run Flask) | ||

| − | * On Windows machines, the -L option works out of the box in the Ubuntu subsystem for Windows or in PowerShell ssh; see [[Connecting to server]]. | + | * On Windows machines, the -L option works out of the box in the Ubuntu subsystem for Windows or in PowerShell ssh; see [[#Connecting to server]]. |

* (STRONGLY NOT RECOMMENDED, USE POWERSHELL INSTEAD) If you use PuTTY on Windows, follow a [https://blog.devolutions.net/2017/04/how-to-configure-an-ssh-tunnel-on-putty tutorial] on how to create an ssh tunnel. The destination should be localhost:PORT and the source port should be PORT. Do not forget to click Add. | * (STRONGLY NOT RECOMMENDED, USE POWERSHELL INSTEAD) If you use PuTTY on Windows, follow a [https://blog.devolutions.net/2017/04/how-to-configure-an-ssh-tunnel-on-putty tutorial] on how to create an ssh tunnel. The destination should be localhost:PORT and the source port should be PORT. Do not forget to click Add. | ||

* Keep this ssh connection open while you need to access your Flask web pages; it makes port PORT available on your local machine | * Keep this ssh connection open while you need to access your Flask web pages; it makes port PORT available on your local machine | ||

| Line 3,124: | Line 3,124: | ||

print(t) | print(t) | ||

# prints: | # prints: | ||

# [[1 0 0 1 1 0 0 0 0] | # [[1 0 0 1 1 0 0 0 0] | ||

# [0 1 1 0 0 2 1 1 1]] | # [0 1 1 0 0 2 1 1 1]] | ||

| Line 3,138: | Line 3,138: | ||

<pre> | <pre> | ||

>>> import numpy as np | >>> import numpy as np | ||

>>> a = np.array([[1,2,3],[4,5,6]]) | >>> a = np.array([[1,2,3],[4,5,6]]) | ||

>>> b = np.array([[7,8],[9,10],[11,12]]) | >>> b = np.array([[7,8],[9,10],[11,12]]) | ||

>>> a | >>> a | ||

array([[1, 2, 3], | array([[1, 2, 3], | ||

[4, 5, 6]]) | [4, 5, 6]]) | ||

>>> b | >>> b | ||

array([[ 7, 8], | array([[ 7, 8], | ||

[ 9, 10], | [ 9, 10], | ||

[11, 12]]) | [11, 12]]) | ||

| Line 3,152: | Line 3,152: | ||

<pre> | <pre> | ||

>>> 3 * a | >>> 3 * a | ||

array([[ 3, 6, 9], | array([[ 3, 6, 9], | ||

[12, 15, 18]]) | [12, 15, 18]]) | ||

>>> a + 3 * a | >>> a + 3 * a | ||

array([[ 4, 8, 12], | array([[ 4, 8, 12], | ||

[16, 20, 24]]) | [16, 20, 24]]) | ||

</pre> | </pre> | ||

| Line 3,178: | Line 3,178: | ||

=HWflask= | =HWflask= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | See [[Lflask|the lecture]] | + | See [[#Lflask|the lecture]] |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

| Line 3,218: | Line 3,218: | ||

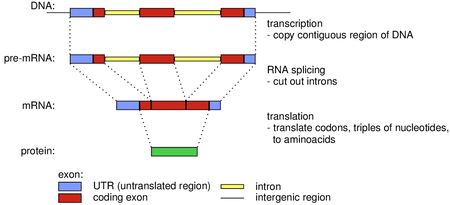

=Lbioinf2= | =Lbioinf2= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | [[HWbioinf2]] | + | [[#HWbioinf2]] |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

| Line 3,256: | Line 3,256: | ||

* [https://genome.ucsc.edu/FAQ/FAQformat.html#format1 BED format] is used to describe location of arbitrary elements in the genome. It has variable number of columns: the first 3 columns with sequence name, start and end are compulsory, but more columns can be added with more details if available. | * [https://genome.ucsc.edu/FAQ/FAQformat.html#format1 BED format] is used to describe location of arbitrary elements in the genome. It has variable number of columns: the first 3 columns with sequence name, start and end are compulsory, but more columns can be added with more details if available. | ||

=HWbioinf2= | =HWbioinf2= | ||

| − | See also the [[Lbioinf2|lecture]]. | + | See also the [[#Lbioinf2|lecture]]. |

Submit the protocol and the required files to <tt>/submit/bioinf2</tt> | Submit the protocol and the required files to <tt>/submit/bioinf2</tt> | ||

| Line 3,285: | Line 3,285: | ||

The results of gene finding are in the GTF format. Examine the files and try to find the answers to the following questions using command-line tools. | The results of gene finding are in the GTF format. Examine the files and try to find the answers to the following questions using command-line tools. | ||

| − | (a) How many exons are in each of the two GTF files? (Beware: simply using <tt>grep</tt> with pattern <tt>CDS</tt> may yield lines containing this string in a different column. You can use e.g. techniques from the [[Lbash|lecture]] and [[HWbash|exercises]] on command-line tools). | + | (a) How many exons are in each of the two GTF files? (Beware: simply using <tt>grep</tt> with pattern <tt>CDS</tt> may yield lines containing this string in a different column. You can use e.g. techniques from the [[#Lbash|lecture]] and [[#HWbash|exercises]] on command-line tools). |

(b) How many genes are in each of the two GTF files? (The files contain rows with word <tt>gene</tt> in the second column, one for each gene) | (b) How many genes are in each of the two GTF files? (The files contain rows with word <tt>gene</tt> in the second column, one for each gene) | ||

| Line 3,304: | Line 3,304: | ||

</syntaxhighlight> | </syntaxhighlight> | ||

| − | * Then sort the resulting SAM file using samtools, store it as a BAM file and create its index, similarly as in the [[HWbioinf1|previous homework]]. | + | * Then sort the resulting SAM file using samtools, store it as a BAM file and create its index, similarly as in the [[#HWbioinf1|previous homework]]. |

* In addition to the BAM file, we produced a file <tt>rnaseq-star.SJ.out.tab</tt> containing the position of detected introns (here called splice junctions or splices). Find in the [https://github.com/alexdobin/STAR/blob/master/doc/STARmanual.pdf manual of STAR] description of this file. | * In addition to the BAM file, we produced a file <tt>rnaseq-star.SJ.out.tab</tt> containing the position of detected introns (here called splice junctions or splices). Find in the [https://github.com/alexdobin/STAR/blob/master/doc/STARmanual.pdf manual of STAR] description of this file. | ||

* There are also additional files with logs and statistics. | * There are also additional files with logs and statistics. | ||

| Line 3,350: | Line 3,350: | ||

=Ljavascript= | =Ljavascript= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | [[HWjavascript]] | + | [[#HWjavascript]] |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

| Line 3,382: | Line 3,382: | ||

* Debugging tip 2: Viewing page source code (via Ctrl+U), can show you what you sent to browser. | * Debugging tip 2: Viewing page source code (via Ctrl+U), can show you what you sent to browser. | ||

* Debugging tip 3: If you open devtools (Ctrl+Shift+J in Chrome, F12 in Firefox) and go to the Console tab, you can see individual Javacript errors. | * Debugging tip 3: If you open devtools (Ctrl+Shift+J in Chrome, F12 in Firefox) and go to the Console tab, you can see individual Javacript errors. | ||

| − | * Consult the [[Lflask|previous lecture]] on running and accessing Flask applications. | + | * Consult the [[#Lflask|previous lecture]] on running and accessing Flask applications. |

== Using multiple charts in the same page (merging examples) == | == Using multiple charts in the same page (merging examples) == | ||

| Line 3,484: | Line 3,484: | ||

=HWjavascript= | =HWjavascript= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | See [[Ljavascript|the lecture]] | + | See [[#Ljavascript|the lecture]] |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

| Line 3,509: | Line 3,509: | ||

=Lbioinf3= | =Lbioinf3= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | [[HWbioinf3]] | + | [[#HWbioinf3]] |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

| Line 3,521: | Line 3,521: | ||

==Finding polymorphisms / genome variants== | ==Finding polymorphisms / genome variants== | ||

* We compare sequencing reads coming from an individual to a reference genome of the species. | * We compare sequencing reads coming from an individual to a reference genome of the species. | ||

| − | * First we align them, as in [[HWbioinf1|the exercises on genome assembly]]. | + | * First we align them, as in [[#HWbioinf1|the exercises on genome assembly]]. |

* Then we look for positions where a substantial fraction of the reads does not agree with the reference (this process is called variant calling). | * Then we look for positions where a substantial fraction of the reads does not agree with the reference (this process is called variant calling). | ||

==Programs and file formats== | ==Programs and file formats== | ||

| − | * For mapping, we will use <tt>[https://github.com/lh3/bwa BWA-MEM]</tt> (you can also try Minimap2, as in [[HWbioinf1|the exercises on genome assembly]]). | + | * For mapping, we will use <tt>[https://github.com/lh3/bwa BWA-MEM]</tt> (you can also try Minimap2, as in [[#HWbioinf1|the exercises on genome assembly]]). |

* For variant calling, we will use [https://github.com/ekg/freebayes FreeBayes]. | * For variant calling, we will use [https://github.com/ekg/freebayes FreeBayes]. | ||

| − | * For reads and read alignments, we will use FASTQ and BAM files, as in the [[Lbioinf1|previous lectures]]. | + | * For reads and read alignments, we will use FASTQ and BAM files, as in the [[#Lbioinf1|previous lectures]]. |

* For storing found variants, we will use [http://www.internationalgenome.org/wiki/Analysis/vcf4.0/ VCF files]. | * For storing found variants, we will use [http://www.internationalgenome.org/wiki/Analysis/vcf4.0/ VCF files]. | ||

| − | * For storing genome intervals, we will use [https://genome.ucsc.edu/FAQ/FAQformat.html#format1 BED files] as in the [[Lbioinf2|previous lecture]]. | + | * For storing genome intervals, we will use [https://genome.ucsc.edu/FAQ/FAQformat.html#format1 BED files] as in the [[#Lbioinf2|previous lecture]]. |

==Human variants== | ==Human variants== | ||

| Line 3,575: | Line 3,575: | ||

=HWbioinf3= | =HWbioinf3= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | See also the [[Lbioinf3|lecture]] | + | See also the [[#Lbioinf3|lecture]] |

Submit the protocol and the required files to <tt>/submit/bioinf3</tt> | Submit the protocol and the required files to <tt>/submit/bioinf3</tt> | ||

| Line 3,649: | Line 3,649: | ||

===Task C: Examining larger vcf files=== | ===Task C: Examining larger vcf files=== | ||

| − | In this task, we will look at <tt>motherChr12.vcf</tt> and <tt>fatherChr12.vcf</tt> files and compute various statistics. You can use command-line tools, such as <tt>grep, wc, sort, uniq</tt> and Perl one-liners (as in [[Lbash]]), or you can write small scripts in Perl or Python (as in [[Lperl]] and [[Lsql]]). | + | In this task, we will look at <tt>motherChr12.vcf</tt> and <tt>fatherChr12.vcf</tt> files and compute various statistics. You can use command-line tools, such as <tt>grep, wc, sort, uniq</tt> and Perl one-liners (as in [[#Lbash]]), or you can write small scripts in Perl or Python (as in [[#Lperl]] and [[#Lsql]]). |

* Write all used commands to your protocol. | * Write all used commands to your protocol. | ||

| Line 3,690: | Line 3,690: | ||

=Lcpp= | =Lcpp= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | [[HWcpp]] | + | [[#HWcpp]] |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

| Line 3,746: | Line 3,746: | ||

=HWcpp= | =HWcpp= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | See [[Lcpp|the lecture]] | + | See [[#Lcpp|the lecture]] |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

| Line 3,916: | Line 3,916: | ||

If you get "AccessDeniedException: 403 The billing account for the owning project is disabled in state absent", you should open project in web UI (console.cloud.google.com), head to page Billing -> Link billing account and select "XY for Education". | If you get "AccessDeniedException: 403 The billing account for the owning project is disabled in state absent", you should open project in web UI (console.cloud.google.com), head to page Billing -> Link billing account and select "XY for Education". | ||

| − | [[File:Billing.png.png||800px]] | + | [[#File:Billing.png.png||800px]] |

==Apache beam and Dataflow== | ==Apache beam and Dataflow== | ||

| Line 4,055: | Line 4,055: | ||

=HWcloud= | =HWcloud= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | See also the [[Lcloud|lecture]] | + | See also the [[#Lcloud|lecture]] |

Deadline: 20th May 2024 9:00 | Deadline: 20th May 2024 9:00 | ||

| Line 4,096: | Line 4,096: | ||

=Lr1= | =Lr1= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | [[HWr1]] {{Dot}} [https://youtu.be/qHdtopqSiXA Video introduction from an older edition of the course] | + | [[#HWr1]] {{Dot}} [https://youtu.be/qHdtopqSiXA Video introduction from an older edition of the course] |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

| Line 4,179: | Line 4,179: | ||

=HWr1= | =HWr1= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | See also the [[Lr1|lecture]]. | + | See also the [[#Lr1|lecture]]. |

<!-- /NOTEX --> | <!-- /NOTEX --> | ||

| Line 4,494: | Line 4,494: | ||

=Lr2= | =Lr2= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | [[HWr2]] | + | [[#HWr2]] |

The topic of this lecture are statistical tests in R. | The topic of this lecture are statistical tests in R. | ||

| Line 4,640: | Line 4,640: | ||

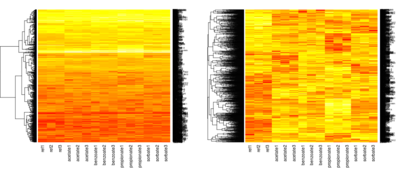

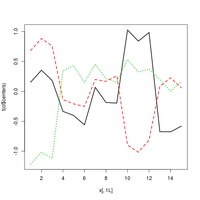

We will apply Welch's t-test to microarray data | We will apply Welch's t-test to microarray data | ||

| − | * Data from the same paper as in [[Lr1#Gene_expression_data|the previous lecture]], i.e. Abbott et al 2007 [http://femsyr.oxfordjournals.org/content/7/6/819.abstract Generic and specific transcriptional responses to different weak organic acids in anaerobic chemostat cultures of ''Saccharomyces cerevisiae''] | + | * Data from the same paper as in [[#Lr1#Gene_expression_data|the previous lecture]], i.e. Abbott et al 2007 [http://femsyr.oxfordjournals.org/content/7/6/819.abstract Generic and specific transcriptional responses to different weak organic acids in anaerobic chemostat cultures of ''Saccharomyces cerevisiae''] |

* Recall: Gene expression measurements under 5 conditions: | * Recall: Gene expression measurements under 5 conditions: | ||

** Control: yeast grown in a normal environment | ** Control: yeast grown in a normal environment | ||

| Line 4,647: | Line 4,647: | ||

* Together our table has 15 columns (3 replicates from 5 conditions) and 6398 rows (genes). Last time we have used only a subset of rows. | * Together our table has 15 columns (3 replicates from 5 conditions) and 6398 rows (genes). Last time we have used only a subset of rows. | ||

* We will test statistical difference between the control condition and one of the acids (3 numbers vs other 3 numbers). | * We will test statistical difference between the control condition and one of the acids (3 numbers vs other 3 numbers). | ||

| − | * See Task B in [[HWr2|the exercises]]. | + | * See Task B in [[#HWr2|the exercises]]. |

==Multiple testing correction== | ==Multiple testing correction== | ||

| Line 4,677: | Line 4,677: | ||

=HWr2= | =HWr2= | ||

<!-- NOTEX --> | <!-- NOTEX --> | ||

| − | See also the [[Lr2|current]] and the [[Lr1|previous]] lecture. | + | See also the [[#Lr2|current]] and the [[#Lr1|previous]] lecture. |

* Do either tasks A,B,C (beginners) or B,C,D (more advanced). You can also do all four for bonus credit. | * Do either tasks A,B,C (beginners) or B,C,D (more advanced). You can also do all four for bonus credit. | ||

| Line 4,748: | Line 4,748: | ||

* Run the test for all four acids. | * Run the test for all four acids. | ||

* '''Report''' for each acid how many genes were significant with p-value at most 0.01. | * '''Report''' for each acid how many genes were significant with p-value at most 0.01. | ||

| − | ** See [[HWr1#Vector_arithmetic|Vector arithmetic in HWr1]] | + | ** See [[#HWr1#Vector_arithmetic|Vector arithmetic in HWr1]] |

** You can count <tt>TRUE</tt> items in a vector of booleans by <tt>sum</tt>, e.g. <tt>sum(TRUE,FALSE,TRUE)</tt> is 2. | ** You can count <tt>TRUE</tt> items in a vector of booleans by <tt>sum</tt>, e.g. <tt>sum(TRUE,FALSE,TRUE)</tt> is 2. | ||

* '''Report''' also how many genes are significant for both acetic and benzoate acids simultaneously (logical "and" is written as <tt>&</tt>). | * '''Report''' also how many genes are significant for both acetic and benzoate acids simultaneously (logical "and" is written as <tt>&</tt>). | ||

Latest revision as of 04:21, 21 July 2024

Website for 2023/24

| Date | Instructor | Group | Topic | Materials |
|---|---|---|---|---|
| 2024-02-22 | (BB) | ALL | Introduction to the course | Contact, Introduction, Rules, Connecting to server, Editors, Command-line basics |
| | | | Introduction to Perl | Lecture, Homework |
| 2024-02-29 | (BB) | ALL | Command-line tools, Perl one-liners | Lecture, Homework |
| 2024-03-07 | (TV) | ALL | Job scheduling and make | Lecture, Homework |
| 2024-03-14 | (TV) | ALL | SQL (and Python) for beginners | Project, Lecture, Python, Homework |
| 2024-03-21 | (VB) | INF/DAV | Python, web crawling, HTML parsing, sqlite3 | Lecture, Homework, Screen |
| | (TV) | BIN | Bioinformatics 1 (sequencing and genome assembly) | Lecture, Homework |
| 2024-03-28 | | | Easter | |
| 2024-04-04 | (VB) | INF/DAV | Text data processing, flask | Lecture, Homework |
| | (TV) | BIN | Bioinformatics 2 (gene finding, RNA-seq) | Lecture, Homework |
| 2024-04-11 | (BB) | INF/DAV | Data visualization in JavaScript | Lecture, Homework |
| | | | Project proposals due Friday April 12 | |
| | (TV) | BIN | Bioinformatics 3 (genome variants) | Lecture, Homework |
| 2024-04-18 | | | Student Research Conference | |
| 2024-04-25 | | | No lecture | |
| 2024-05-02 | (BB) | ALL | Python + C++ | Lecture, Homework |
| 2024-05-09 | (VB) | ALL | Cloud computing | Lecture, Homework |
| 2024-05-16 | | | No lecture | |

Optional materials:

- If you are interested in the R language, you can look at these lectures and homeworks. Files for the tasks are available on the server. However, do not submit these homeworks.

Contents

- 1 Contact

- 2 Introduction

- 3 Rules

- 4 Connecting to server

- 5 Editors

- 6 Command-line basics

- 7 Lperl

- 7.1 Why Perl

- 7.2 Hello world

- 7.3 The first input file for today: TV series

- 7.4 A sample Perl program

- 7.5 The second input file for today: DNA sequencing reads (fastq)

- 7.6 Variables, types

- 7.7 Strings

- 7.8 Regular expressions

- 7.9 Conditionals, loops

- 7.10 Input, output

- 7.11 Sources of Perl-related information

- 7.12 Further optional topics

- 8 HWperl

- 9 Lbash

- 9.1 Efficient use of the Bash command line

- 9.2 Redirecting and pipes

- 9.3 Text file manipulation

- 9.3.1 Commands echo and cat (creating and printing files)

- 9.3.2 Commands head and tail (looking at start and end of files)

- 9.3.3 Commands wc, ls -lh, od (exploring file statistics and details)

- 9.3.4 Command grep (getting lines matching a regular expression)

- 9.3.5 Commands sort, uniq

- 9.3.6 Commands diff, comm (comparing files)

- 9.3.7 Commands cut, paste, join (working with columns)

- 9.3.8 Commands split, csplit (splitting files to parts)

- 9.4 Programs sed and awk

- 9.5 Perl one-liners

- 10 HWbash

- 11 Lmake

- 12 HWmake

- 13 Project

- 14 Lsql

- 15 Python

- 16 HWsql

- 17 Lweb

- 18 HWweb

- 19 Screen

- 20 Lbioinf1

- 21 HWbioinf1

- 22 Lflask

- 23 HWflask

- 24 Lbioinf2

- 25 HWbioinf2

- 26 Ljavascript

- 27 HWjavascript

- 28 Lbioinf3

- 29 HWbioinf3

- 30 Lcpp

- 31 HWcpp

- 32 Lcloud

- 33 HWcloud

- 34 Lr1

- 35 HWr1

- 36 Lr2

- 37 HWr2

Contact

Instructors

- Mgr. Vladimír Boža, PhD.

- doc. Mgr. Broňa Brejová, PhD.

- doc. Mgr. Tomáš Vinař, PhD.

- MSc. Fatana Jafari

- Contact us by email with questions or requests for longer consultations

Schedule

- Thursday 14:50-17:10, I-H6: lecture, followed by solving tasks with our help

Introduction

Target audience

This course is offered at the Faculty of Mathematics, Physics and Informatics, Comenius University in Bratislava, for second-year students of the bachelor's Data Science and Bioinformatics study programs and for students of the bachelor's and master's Computer Science study programs. It is a prerequisite for the master's state exam in Bioinformatics and Machine Learning.

However, the course is open to students from other study programs if they satisfy the following informal prerequisites.

- Students should be proficient in programming in at least one programming language and not afraid to learn new languages.

- Students should have basic knowledge of working on the Linux command line (at least basic commands for working with files and folders, such as cd, mkdir, cp, mv, rm, chmod). If you do not have these skills, please study our tutorial before the second lecture. The first week contains detailed instructions to get you started.

Although most technologies covered in this course can be used for processing data from many application areas, we will illustrate some of them using examples from bioinformatics. We will explain the necessary biological terminology as needed.

Course objectives

Quick summary

- Learn different languages and technologies for data processing tasks:

- obtaining data,

- preprocessing it to suitable form,

- connecting existing tools into pipelines,

- performing statistical tests and data visualization.

- Statistical methods, visualization and machine learning are covered in more detail in other courses.

- These tasks are fundamental in data science and bioinformatics, but also useful in many areas of computer science where experimental evaluation and comparison of methods are needed.

- Rather than learning one set of tools in detail, we give an overview of many different ones.

- The main reason is to improve your flexibility so that you can quickly learn a new language or library in the future.

More details

Computer science courses cover many interesting algorithms, models and methods that can be used for data analysis. However, when you want to use these methods for real data, you will typically need to make considerable efforts to obtain the data, pre-process it into a suitable form, test and compare different methods or settings, and arrange the final results in informative tables and graphs. Often, these activities need to be repeated for different inputs, different settings, and so on. Many jobs in data science and bioinformatics involve data processing using existing tools and small custom scripts. This course will cover some programming languages and technologies suitable for such activities.

This course is also recommended for students whose bachelor's or master's theses involve substantial empirical experiments (e.g. experimental evaluation of their methods and comparison with other methods on real or simulated data).

We do not aim to teach any single language or technology in detail. Rather, we give you an overview of many different options, often covering a different language each week. One of the goals is to increase your flexibility so that you can quickly adapt when you need to use something new.

Basic guidelines for working with data

As you know, in programming it is recommended to adhere to certain practices, such as good coding style, modular design, thorough testing etc. Such practices add a little extra work, but are much more efficient in the long run. Similar good practices exist for data analysis. As an introduction, we recommend the following article by the well-known bioinformatician William Stafford Noble; his advice applies outside of bioinformatics as well.

- Noble WS. A quick guide to organizing computational biology projects. PLoS Comput Biol. 2009 Jul 31;5(7):e1000424.

Several important recommendations:

- Noble 2009: "Everything you do, you will probably have to do over again."

- After doing an entire analysis, you often find out that there was a problem with the input data or one of the early steps, and therefore everything needs to be redone.

- Therefore it is better to use techniques that allow you to keep all details of your workflow and to repeat them if needed.

- Try to avoid manually changing files, because this makes rerunning analyses harder and more error-prone.

- Document all steps of your analysis

- Note what you have done, why you have done it, and what the result was.

- Some of these things may seem obvious to you at present, but you may forget them in a few weeks or months, and you may need them to write up your thesis or to repeat the analysis.

- Good documentation is also indispensable for collaborative projects.

- Keep a logical structure of your files and folders

- Their names should be indicative of the contents (create a sensible naming scheme).

- However, if you have too many versions of the experiment, it may be easier to name them by date rather than create new long names (your notes should then detail the meaning of each dated version).

- Try to detect problems in the data

- Big files often hide some problems in the format, unexpected values etc. These may confuse your programs and make the results meaningless.

- In your scripts, check that the input data conform to your expectations (format, values in reasonable ranges etc).

- In unexpected circumstances, scripts should terminate with an error message and a non-zero exit code.

- If your script executes another program, check its exit code.

- Also check intermediate results as often as possible (by manual inspection, computing various statistics etc) to detect errors in the data and your code.
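The recommendations on checking input data and exit codes can be sketched in Python, one of the languages used in this course. This is a minimal illustration, not a prescribed course tool: the input format (a hypothetical tab-separated file with a name and a non-negative number on each line) and the helper names `parse_line` and `run_checked` are our own assumptions. Calling `sys.exit` with a string prints the message to standard error and ends the script with exit code 1.

```python
import subprocess
import sys


def parse_line(line, line_no):
    """Parse one line 'name<TAB>value' of a hypothetical input file.

    Stops the script with an error message (exit code 1) if the line
    does not match our expectations.
    """
    fields = line.rstrip("\n").split("\t")
    if len(fields) != 2:
        sys.exit(f"Error on line {line_no}: expected 2 columns, got {len(fields)}")
    name, value = fields
    try:
        number = float(value)
    except ValueError:
        sys.exit(f"Error on line {line_no}: {value!r} is not a number")
    if number < 0:
        sys.exit(f"Error on line {line_no}: negative value {number}")
    return name, number


def run_checked(command):
    """Run an external program and stop if its exit code is non-zero."""
    result = subprocess.run(command)
    if result.returncode != 0:
        sys.exit(f"Error: {command[0]} failed with exit code {result.returncode}")
```

A script would then call `parse_line(line, i)` for each line read with `enumerate(input_file, start=1)`. The standard library also offers `subprocess.run(command, check=True)`, which raises `CalledProcessError` instead of returning a non-zero exit code.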

Rules

Grading

- Homeworks: 45%

- Project proposal: 5%

- Project: 40%

- Oral exam: 10%

Grades:

- A: 90 and more, B: 80...89, C: 70...79, D: 60...69, E: 50...59, FX: less than 50%

You will get FX if your oral exam is not satisfactory, even if you have enough points from other activities.
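The weighting above can be illustrated with a short Python function. It is an unofficial sketch of the arithmetic, not the instructors' calculator. It assumes that each component is entered as a percentage (0 to 100) of its maximum and that each boundary is an inclusive lower bound (e.g. a total of at least 80 but below 90 gives B). The name `final_grade` and the `oral_ok` flag are hypothetical.

```python
def final_grade(homework, proposal, project, oral, oral_ok=True):
    """Return (total percentage, letter grade) from component percentages.

    Weights follow the list above: homeworks 45%, project proposal 5%,
    project 40%, oral exam 10%. Inputs are percentages in 0..100.
    """
    # Integer weights divided by 100 keep integer inputs free of rounding noise.
    total = (45 * homework + 5 * proposal + 40 * project + 10 * oral) / 100
    if not oral_ok:
        # An unsatisfactory oral exam means FX regardless of points.
        return total, "FX"
    for limit, letter in [(90, "A"), (80, "B"), (70, "C"), (60, "D"), (50, "E")]:
        if total >= limit:
            return total, letter
    return total, "FX"
```

For example, `final_grade(80, 100, 90, 70)` returns `(84.0, "B")`, because 0.45·80 + 0.05·100 + 0.40·90 + 0.10·70 = 36 + 5 + 36 + 7 = 84.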

Course format

- Every Thursday there is a three-hour class that starts with a short lecture. Then you start solving the assigned tasks, which you complete as a homework assignment.

- We highly recommend doing the homework during class, as we can help you as needed. We really encourage you to ask questions during this time. At other times, ask your questions via email, but you may have to wait longer for the answer.

- If you attend the whole 3-hour class, you are allowed to work on the homework in a pair with one of your classmates. You can then also finish the homework together after the class and submit just one homework listing both your names.

- During work, occasionally exchange roles (the driver, who types the program or commands, and the navigator, who advises the driver). Both students should completely understand all parts of the submitted homework.

- If you do not attend the class (or only a part of it), you have to do the homework individually.

- Some weeks have separate materials for the Bioinformatics program and for the other programs. If you would like to do a homework other than the one intended for you, you must obtain prior consent from the instructors.

- You will submit a project during the exam period. Afterwards there will be an oral exam concentrating on the project and submitted homework.

- You will have an account on a Linux server dedicated to this course. Use this account only for the purposes of this course and try not to overload the server so that it can serve all students. Any attempts to disrupt the operation of the server will be considered a serious violation of the course rules.

Homework

- The deadline for each homework is 9:00 on the day of the next lecture, i.e. usually almost one week after the homework was published.

- You can work on your homework on any computer, preferably under Linux. However, the submitted code or commands should be executable on the course server, so do not use special software or settings on your computer.

- The homework is submitted by copying the required files to the required directory on the server. Details will be specified in the assignment.

- Follow any filenames specified in the assignment and use reasonable filenames for additional files.

- Make sure the submitted source code is easy to read (indentation, reasonable variable names, comments as needed)

Protocols

- Usually, a document called a protocol will be a required part of the homework.

- Write the protocol as a plain text file named protocol.txt and copy it to the submission directory.

- The protocol can be in Slovak or English.

- If you write with diacritics, use UTF-8 encoding, but feel free to omit diacritics in protocols.

- For most homeworks, you will get a protocol outline; follow it and do not change our headings.
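If you are not sure whether your protocol is saved in UTF-8, you can try decoding it. The following is a minimal Python sketch. The function name `is_utf8` is our own. It relies on Python's built-in decoder, which raises `UnicodeDecodeError` on bytes that are not valid UTF-8.

```python
from pathlib import Path


def is_utf8(path):
    """Return True if the file at `path` decodes cleanly as UTF-8."""
    data = Path(path).read_bytes()
    try:
        data.decode("utf-8")
    except UnicodeDecodeError:
        return False
    return True
```

For example, `is_utf8("protocol.txt")` should return `True` before you submit a protocol that contains diacritics.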

Self-assessment

- At the top of the protocol, fill in the names of the authors of the homework. This is very important if you work in a pair.

- Below fill in a self-assessment which for every task should contain one of the following codes.

- Use code DONE if you think the task is completely and correctly solved.

- Use code PART if you have completed only a part of the task. After the code, briefly state which part was completed and, if applicable, what you had problems with.

- Use code UNSURE if you have completed the task but are not sure about something. Again, briefly explain what you are unsure about.

- Use code NOTHING if you have not even started the task.

- Your self-assessment will guide us in grading. Tasks marked as DONE will be checked briefly, but we will try to give you feedback on tasks marked UNSURE or PART, particularly if you note down what was causing you problems.

- Fill in the self-assessment as accurately as you can. It can influence your grade.

Protocol contents

- Unless specified otherwise, the protocol should contain the following information:

- List of submitted files: usually already filled in, add any other files you submit with a brief explanation of their meaning.

- The sequence of commands used, or other steps you took to get the results. Include commands to process data and run your or other programs. It is not necessary to specify commands related to the programming itself (starting the editor, setting file permissions), copying the files to the server, etc. For more complex commands, also provide brief comments explaining the purpose of a particular command or group of commands.

- Observations: the results required by the tasks and any discussion of these results.

- Resources: websites and other sources that you used to solve the task. You do not have to list the course website and resources recommended directly in the assignment.

Overall, the protocol should allow the reader to understand your files and, if interested, to perform the same calculations you used to obtain the results. You do not have to write a formal text, only clear and brief notes.

Project

The aim of the project is to extend your skills by working on a data processing project. Your task is to obtain data, analyze it with some techniques from the lectures and display the results in graphs and tables.

- About two thirds of the way through the semester, you will submit a short project proposal.

- The deadline for submitting the project (including the written report) will be during the exam period.

- You can also do projects in pairs, but then we require a larger project and each member should be primarily responsible for a certain part of the project

More detailed information on projects is on a separate page.

Oral exam

- During the oral exam, we will give you our feedback on your project and ask related questions.

- We can also ask you about some of the homeworks you have submitted during the semester.

- You should be able to explain your code and do small modifications in it.

Details:

- Before the exam, we will publish a preliminary schedule.

- Please come to the computer room where the exam takes place at the indicated time, start one of the computers and open your project in a form in which you can modify it. You may also use your own computer if you prefer.

- One of us will talk with you and assign you a small task related to your project or homeworks.

- You will have time to work on this task. You may use course website and internet resources, such as documentation and existing discussions. You may not communicate with other people or use AI tools during the oral exam.

- Please leave any resources you used open in the browser.

Academic integrity

- You are allowed to talk to classmates and other people about homework and projects and general strategies to solve them. However, the code, the results obtained, and the text you submit must be your own work. It is forbidden to show your code or texts to your classmates.

- When working on the homework and the project, we expect you to use Internet resources, especially various manuals and discussion forums on the used technologies. However, do not try to find ready-made solutions to the given tasks. List all resources used in a homework or a project.

- Do not use AI chatbots or AI code generation in your editor (such as GitHub Copilot and similar tools) for solving homeworks (you do not learn anything that way!). Some use of AI code generation is permitted on the final projects, see project rules.

- If we find cases of plagiarism or unauthorized aids, all participating students will receive zero points for the relevant homework or project (including the students who provided their solutions to others to copy). Violations of academic integrity will be also referred to the faculty disciplinary committee.

Sharing materials

Assignments and materials for the course are freely available on this webpage. However, do not publish or otherwise share your homework solutions as they closely follow the outline given by us. You can publish your projects if you wish, as long as it does not conflict with your agreement with the provider of your data.

Connecting to server

In the course, you will be working on a Linux server. You can connect to this server via ssh, using the same username and password as for AIS2. In the computer classroom at the faculty, we recommend connecting to the server from Linux.

Connection through ssh

If connecting from a Linux computer, open a console (command-line window) and run:

ssh your_username@vyuka.compbio.fmph.uniba.sk -XC

The server will prompt you for password, but it will not display anything while you type. Just type your password and press Enter.

If connecting from a Windows 10 or Windows 11 computer, open a command-line window in the Windows Subsystem for Linux (Ubuntu), in PowerShell, or in the cmd.exe Command Prompt and run:

ssh your_username@vyuka.compbio.fmph.uniba.sk

When prompted, type your password and press Enter, as for Linux. See also more detailed instructions here [1] or here [2].

On Windows, this command provides only a text connection, which is sufficient for most of the course. To enable support for graphical applications, follow the instructions in the next section.

Installation of X server on Windows

This is not needed for Linux, just use -XC option in ssh.

To use applications with GUIs, you need to tunnel X-server commands from the server to your local machine (this is accomplished by your ssh client), and you need a program that can interpret these commands on you local machine (this is called X server).

- Install the PuTTY client, which you will use instead of ssh.

- Install an X server, such as Xming.

- Make sure that the X server is running (you should see an "X" icon in your taskbar).

- Run PuTTY, choose the SSH connection type, and in the settings go to Connection->SSH->X11 and check the "Enable X11 forwarding" box.

- Log in to the vyuka.compbio.fmph.uniba.sk server in PuTTY.

- The command echo $DISPLAY on the server should print a non-empty string (e.g. localhost:11.0).

- Try running xeyes &: this simple test application should display a pair of eyes following your mouse cursor.

Copying files to/from the server via scp or WinSCP

- You can copy files using the scp command on the command line, both in Windows and Linux.

- Alternatively use the graphical WinSCP program for Windows.

Examples of using scp command

# copies file protocol.txt to /submit/perl/username on the server

scp protocol.txt username@vyuka.compbio.fmph.uniba.sk:/submit/perl/username/

# copies file protocol.txt to the home folder of the user on the server

scp protocol.txt username@vyuka.compbio.fmph.uniba.sk:

# copies file protocol.txt from the home directory on the server to the current folder on the local computer

scp username@vyuka.compbio.fmph.uniba.sk:protocol.txt .

# copies folder /tasks/perl from the server to the current folder on the local computer
# notice the -r option for copying whole directories

scp -r username@vyuka.compbio.fmph.uniba.sk:/tasks/perl .

Mounting files from Linux server to your Linux computer via sshfs

On Linux, you can mount the filesystem from the server as a directory on your machine using sshfs tool, and then work with it as with local folders, using both command-line and graphical file managers and editors.

An example of using sshfs command for mounting a folder from a remote server:

mkdir vyuka # create an empty folder with an arbitrary name

sshfs username@vyuka.compbio.fmph.uniba.sk: vyuka # mount the remote home folder to the empty folder

After running these commands, folder vyuka will contain your home folder on the vyuka server. You can copy files to and from the server and even open them in editors as if they were on your computer, although access will be slower because of the network.

MacOS

- The ssh command should work in text mode on macOS.

- For GUIs you need an X server; try installing XQuartz.

- Alternatively, you can install sshfs from macFUSE and mount vyuka as shown above.

Editors

- During the course, you will have to edit scripts, protocols and other files.

- Our server has several editors installed.

- If you can use a graphical interface, we recommend kate, which works similarly to Windows-based editors.

- If you need to work in a text-only mode (slow connection or no X support):

- When working from Windows, you can also connect to the server via WinSCP and edit the files using the WinSCP built-in editor or other editors installed on your computer (see #Connecting_to_server).

- When working from Linux, you can mount your home directory using sshfs and again use editors installed on your computer (see #Connecting_to_server).

Command-line basics

This is a brief tutorial for students who are not familiar with the Linux command line.

Files and folders

- Images, texts, data, etc. are stored in files.

- Files are grouped in folders (directories) for better organization.

- A folder can also contain other folders, forming a tree structure.

Moving around folders (ls, cd)

- One folder is always selected as the current one; it is shown on the command line

- The list of files and folders in the current folder can be obtained with the ls command

- The list of files in some other folder can be obtained with the command ls other_folder

- The command cd new_folder changes the current folder to the specified new folder

- Notes: ls is an abbreviation of "list", cd is an abbreviation of "change directory"

Example:

- When we login to the server, we are in the folder /home/username.

- We then execute several commands listed below

- Using cd command, we move to folder /tasks/perl/ (the computer does not print anything, only changes the current folder).

- Using ls command, we print all files in the /tasks/perl/ folder.

- Finally, we use the command ls /tasks to print the folders in /tasks.

username@vyuka:~$ cd /tasks/perl/

username@vyuka:/tasks/perl$ ls

fastq-lengths.pl reads-small.fastq reads-tiny-trim1.fastq series.tsv

protocol.txt reads-tiny.fasta reads-tiny-trim2.fastq

reads.fastq reads-tiny.fastq series-small.tsv

username@vyuka:/tasks/perl$ ls /tasks

bash bioinf1 bioinf2 bioinf3 cloud flask make perl python r1 r2

Absolute and relative paths

- An absolute path specifies how to get to a given file or folder from the root of the whole filesystem.

- For example /tasks/perl/, /tasks/perl/series.tsv, /home/username etc.

- Individual folders are separated by a slash / in the path.

- Absolute paths start with a slash /.

- A relative path specifies how to get to a given file or folder from the current folder.

- For example, if the current folder is /tasks/perl/, the relative path to file /tasks/perl/series.tsv is simply series.tsv

- If the current folder is /tasks/, the relative path to file /tasks/perl/series.tsv is perl/series.tsv

- Relative paths do not start with a slash.

- A relative path can also go "upwards" to the containing folder using .. (two dots).

- For example, if the current folder is /tasks/perl/, the relative path .. refers to the same folder as /tasks, and ../../home refers to /home.

Commands ls, cd and others accept both relative and absolute paths.
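To try absolute and relative paths without touching real data, we can rebuild a tiny stand-in for /tasks/perl inside a throwaway folder. This is a minimal sketch under our own assumptions: mktemp -d creates the temporary folder and prints its absolute path, touch creates an empty file (the real series.tsv is not copied), and pwd prints the absolute path of the current folder.

```shell
# create a throwaway folder; mktemp -d prints its absolute path
sandbox=$(mktemp -d)
# rebuild a tiny stand-in for /tasks/perl/series.tsv inside it
mkdir "$sandbox/tasks"
mkdir "$sandbox/tasks/perl"
touch "$sandbox/tasks/perl/series.tsv"

# an absolute path works regardless of the current folder
ls "$sandbox/tasks/perl/series.tsv"

# relative paths are resolved from the current folder
cd "$sandbox/tasks/perl"
ls series.tsv          # the file is directly in the current folder
cd ..                  # move up to the containing folder .../tasks
ls perl/series.tsv     # the same file, reached by a different relative path
ls ../tasks/perl       # go up one level and back down again
pwd                    # print the absolute path of the current folder
```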

Important folders

- Root is the folder with absolute path /, the starting point of the tree structure of folders.

- The home directory, with absolute path /home/username, is set as the current folder after login.

- Users typically store most of their files within their home directory and its subfolders, unless there is a good reason to place them elsewhere.

- Tilde ~ is an abbreviation for your home directory. For example cd ~ will place you there.
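A short sketch of using the tilde; it assumes a typical Linux system where the folder /tmp exists, and uses pwd to print the current folder.

```shell
cd ~        # go to the home directory from anywhere
pwd         # on the course server this prints /home/username
cd /tmp     # move somewhere else
cd ~        # ...and jump back home
echo ~      # the shell replaces ~ with the full path of the home directory
```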

Wildcards

- We can use wildcards to work with only selected files in a folder.

- For example, all files starting with letter x in the current folder can be printed as ls x*

- The star represents any number of characters (including none) in the filename.

- All files containing letter x anywhere in the name can be printed as ls *x*
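The wildcards above can be tried safely in an empty temporary folder. In this sketch the file names are made up for the demo, mktemp -d creates the throwaway folder and touch creates empty files.

```shell
# work in an empty throwaway folder so the listing is predictable
cd "$(mktemp -d)"
# touch creates empty files; these names are made up for the demo
touch xray.txt box.txt data.csv x

ls x*       # names starting with x: x xray.txt
ls *x*      # names containing x anywhere: box.txt x xray.txt
ls *.txt    # names ending in .txt: box.txt xray.txt
```

The star is expanded into the list of matching names by the shell before ls runs, so the same wildcards also work with other commands, such as cp, mv or rm.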

Examining file content (less)

- Type less filename

- This will print the first page of the file on your screen. Then you can move around the file using the space bar or the Page Up and Page Down keys. You can quit the viewer by pressing q (abbreviation of quit). Help with additional keys is shown after pressing h (abbreviation of help).

- If you have a short file and want to just print it all on your screen, use cat filename

- Try for example the following commands:

less /tasks/perl/reads-small.fastq # move around the file, then press q

cat /tasks/perl/reads-tiny.fasta # see the whole file on the screen

Organizing files and folders

Creating new folders (mkdir)

- To create a new folder (directory), use a command of the form mkdir new_folder_path

- The path to the new folder can be relative or absolute

- For example, assuming that we are in the home folder, the following two commands first create a new folder called test and then a folder test2 within it.

mkdir test

# change the next command according to your username

mkdir /home/username/test/test2
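The same steps can be rehearsed in a throwaway folder instead of the real home directory. This sketch assumes mktemp -d for creating the temporary folder and builds an absolute path from the output of pwd instead of typing /home/username.

```shell
# start in a fresh throwaway folder instead of the real home directory
cd "$(mktemp -d)"
mkdir test                    # relative path: a folder inside the current one
mkdir test/test2              # works only because test already exists
mkdir "$(pwd)/test/test3"     # absolute path assembled from the current folder
ls test                       # prints: test2  test3
```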

Copying files (cp)

- To copy files, use a command of the form cp source destination

- This will copy file specified as the source to the destination.

- Both source and destination can be specified via absolute or relative paths.

- The destination can be a folder into which the file is copied, or the full name of the new copy.

- We can also copy several files to the same folder as follows: cp file1 file2 file3 destination

Example: Let us assume that the current directory is /home/username and that directories test and test2 were created as above. The following commands first copy file /tasks/perl/reads-small.fastq to the current folder (which is the home directory) and then to the new directory test. In the third step, the file is copied again within the current folder under the new name test.fastq. Next, we move to the test directory and copy test.fastq there as well. Finally, both files in test are copied to its subfolder test2.

# change the next command according to your username

# this copies an existing file to the home directory using absolute paths

cp /tasks/perl/reads-small.fastq /home/username/

# now we use relative paths to copy the file from home to the new folder called test

cp reads-small.fastq test/

# now the file is copied within current folder under a new filename test.fastq

cp reads-small.fastq test.fastq

# change directory to test

cd test

# copy the file again from the home directory to the test directory under name test.fastq

cp ../test.fastq .

# we can copy several files to a different folder

cp test.fastq reads-small.fastq test2/

- Copying whole folders can be done via cp -r source destination

- While using cp, it is a good idea to add the -i option, which asks for confirmation before overwriting an existing file. For example:

cd ~

cp -i reads-small.fastq test/

- To move files to a new folder or rename them, you can use mv command, which works similarly to cp, i.e. you specify first source, then destination. Option -i can be used here as well.

- The command rm deletes the specified files; rm -r deletes whole folders including their contents (be very careful!).

- An empty folder can be deleted using rmdir
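Copying folders, moving, renaming and deleting can all be practised in a throwaway folder where no mistake can hurt. In this sketch the folder and file names are hypothetical, mktemp -d creates the temporary folder, and touch creates an empty file. We do not use rm -i here only because the sketch is meant to run without typing answers.

```shell
# practise in a throwaway folder so that nothing important can be lost
cd "$(mktemp -d)"
mkdir data
touch data/reads.txt                       # create an empty file

cp -r data backup                          # copy the whole folder data as backup
mv -i data/reads.txt data/reads-old.txt    # rename a file inside data
mv -i backup/reads.txt .                   # move a file into the current folder

rm reads.txt                               # delete a single file
rmdir backup                               # backup is empty now, so rmdir succeeds
rm -r data                                 # delete data together with reads-old.txt
ls                                         # prints nothing: the folder is empty again
```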

Beware: be very careful on the command-line

- The command line will execute whatever you type; it generally does not ask for confirmation, even for dangerous actions.

- You can very easily remove or overwrite some important file by mistake.

- There is no undo.

- Therefore always check your command before pressing Enter. Use -i option for cp, mv, and possibly even rm.

File permissions and other properties

- Linux gives us control over which files we share and which we keep private.

- Each file (and folder) has its owner (usually the user who created it) and a group of users it is assigned to.

- It has three levels of permissions: for its owner (abbreviated u, as user), for its group (g) and for every user on the system (o, as other).

- At each level, we can grant the right to read the file (abbreviated r), to write or modify it (abbreviated w) and to execute the file (abbreviated x).

- Permission to execute is important for executable programs but also for folders, where it allows entering the folder (e.g. with cd) and accessing the files inside it.

The long form of ls command

- The ls command can be run with the switch -l to produce more detailed information about files.

- Here are two lines from the output after running ls -l /tasks/

drwxr-xr-x 2 bbrejova users 4096 Feb 11 22:43 perl

drwxrwxr-x 5 bbrejova teacher 4096 Mar 7 2022 make

- The very first character of each line is d for folders (directories) and - for regular files. Here we see two folders.

- The next three characters give permissions for the user, the next three for the group and the last three for other users. For example, folder perl has all three permissions rwx for the user, and all permissions except writing for the group and others.

- Columns 3 and 4 of the output list the owner and the group assigned to the file.

- Column 5 lists the size (by default in bytes; this can be changed to human-readable sizes, such as gigabytes, using -h switch)

- Finally, the line contains the date of the last modification of the file and its name.
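To see both kinds of lines in the long listing, we can create one folder and one regular file in a throwaway folder. This is a sketch assuming mktemp -d and touch as helpers; the exact permissions, owner, group and dates printed will differ from system to system.

```shell
# create one folder and one empty regular file in a throwaway folder
cd "$(mktemp -d)"
mkdir perl
touch protocol.txt
ls -l     # the perl line starts with d, the protocol.txt line with -
ls -lh    # the same listing, with sizes in human-readable units
```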

Changing file permissions

- File permissions can be changed using chmod command.

- It is followed by a change specification in the form of [who][+ or -][which rights]

- Part [who] can be o (others), g (group), u (user), a (all = user+group+others)

- Sign + means adding rights, sign - means removing them

- Rights can be w (write), r (read), x (execute)

- There are also many other possibilities, see the documentation (man chmod)

# add read permission to others for file protocol.txt:

chmod o+r protocol.txt

# remove write permissions from others and group for file protocol.txt:

chmod og-w protocol.txt

# add read permissions to everybody for whole folder "data" and its files and subfolders

chmod -R a+r data
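The effect of chmod can be checked with ls -l in a throwaway folder. This sketch assumes mktemp -d and touch as helpers and uses made-up file names. It first removes all permissions so that the final result does not depend on the defaults of the system; the recursive switch is a capital -R.

```shell
cd "$(mktemp -d)"
touch protocol.txt
chmod a-rwx protocol.txt   # remove every permission from everybody
chmod u+rw protocol.txt    # the owner may read and write
chmod o+r protocol.txt     # other users may read
ls -l protocol.txt         # permissions now read -rw----r--

mkdir data
touch data/file.txt
chmod -R a+r data          # capital -R: apply to data and everything inside it
ls -l data                 # file.txt is now readable by everybody
```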


Lperl

This lecture is a brief introduction to the Perl scripting language. We recommend revisiting necessary parts of this lecture while working on the exercises.

Homework: #HWperl

Why Perl